Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

This is the Buildbot documentation for Buildbot version latest.

If you are evaluating Buildbot and would like to get started quickly, start with the Tutorial. Regular users of Buildbot should consult the Manual, and those wishing to modify Buildbot directly will want to be familiar with the Developer’s Documentation.

Table Of Contents

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

1. Buildbot Tutorial

Contents:

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

1.1. First Run

1.1.1. Goal

This tutorial will take you from zero to running your first buildbot master and worker as quickly as possible, without changing the default configuration.

This tutorial is all about instant gratification and the five minute experience: in five minutes we want to convince you that this project works, and that you should seriously consider spending time learning the system. In this tutorial no configuration or code changes are done.

This tutorial assumes that you are running Unix, but might be adaptable to Windows.

Thanks to virtualenv, installing buildbot in a standalone environment is very easy. For those more familiar with Docker, there also exists a docker version of these instructions.

You should be able to cut and paste each shell block from this tutorial directly into a terminal.

1.1.2. Simple introduction to BuildBot

Before trying to run BuildBot it’s helpful to know what BuildBot is.

BuildBot is a continuous integration framework written in Python. It consists of a master daemon and potentially many worker daemons that usually run on other machines. The master daemon runs a web server that allows the end user to start new builds and to control the behaviour of the BuildBot instance. The master also distributes builds to the workers. The worker daemons connect to the master daemon and execute builds whenever master tells them to do so.

In this tutorial we will run a single master and a single worker on the same machine.

A more thorough explanation can be found in the manual section of the Buildbot documentation.

1.1.3. Getting ready

There are many ways to get the code on your machine.

We will use the easiest one: via pip in a virtualenv.

It has the advantage of not polluting your operating system, as everything will be contained in the virtualenv.

To make this work, you will need the following installed:

Python and the development packages for it

Preferably, use your distribution package manager to install these.

You will also need a working Internet connection, as virtualenv and pip will need to download other

projects from the Internet. The master and builder daemons will need to be able to connect to

github.com via HTTPS to fetch the repo we’re testing.

If you need to use a proxy for this ensure that either the HTTPS_PROXY or ALL_PROXY

environment variable is set to your proxy, e.g., by executing export

HTTPS_PROXY=http://localhost:9080 in the shell before starting each daemon.

Note

Buildbot does not require root access. Run the commands in this tutorial as a normal, unprivileged user.

1.1.4. Creating a master

The first necessary step is to create a virtualenv for our master. We first create a separate directory to demonstrate the distinction between a master and worker:

mkdir -p ~/buildbot-test/master_root

cd ~/buildbot-test/master_root

Then we create the virtual environment. On Python 3:

python3 -m venv sandbox

source sandbox/bin/activate

Next, we need to install several build dependencies to make sure we can install buildbot and its supporting packages. These build dependencies are:

GCC build tools (

gccfor RHEL/CentOS/Fedora based distributions, orbuild-essentialfor Ubuntu/Debian based distributions).Python development library (

python3-develfor RHEL/CentOS/Fedora based distributions, orpython3-devfor Ubuntu/Debian based distributions).OpenSSL development library (

openssl-develfor RHEL/CentOS/Fedora based distributions, orlibssl-devfor Ubuntu/Debian based distributions).libffi development library (

libffi-develfor RHEL/CentOS/Fedora based distributions, orlibffi-devfor Ubuntu/Debian based distributions).

Install these build dependencies:

# if in Ubuntu/Debian based distributions:

sudo apt-get install build-essential python3-dev libssl-dev libffi-dev

# if in RHEL/CentOS/Fedora based distributions:

sudo yum install gcc python3-devel openssl-devel libffi-devel

or refer to your distribution’s documentation on how to install these packages.

Now that we are ready, we need to install buildbot:

pip install --upgrade pip

pip install 'buildbot[bundle]'

Now that buildbot is installed, it’s time to create the master.

my_master represents a path to a directory, where future master will be created:

buildbot create-master my_master

Buildbot’s activity is controlled by a configuration file. Buildbot by default uses configuration

from file at master.cfg, but its installation comes with a sample configuration file named

master.cfg.sample. We will use the sample configuration file unchanged, but we have to rename

it to master.cfg:

mv my_master/master.cfg.sample my_master/master.cfg

Finally, start the master:

buildbot start my_master

You will now see some log information from the master in this terminal. It should end with lines like these:

2014-11-01 15:52:55+0100 [-] BuildMaster is running

The buildmaster appears to have (re)started correctly.

From now on, feel free to visit the web status page running on the port 8010: http://localhost:8010/

Our master now needs (at least) one worker to execute its commands. For that, head on to the next section!

1.1.5. Creating a worker

The worker will be executing the commands sent by the master. In this tutorial, we are using the buildbot/hello-world project as an example. As a consequence of this, your worker will need access to the git command in order to checkout some code. Be sure that it is installed, or the builds will fail.

Same as we did for our master, we will create a virtualenv for our worker next to the master’s one. It would however be completely ok to do this on another computer - as long as the worker computer is able to connect to the master’s . We first create a new directory for the worker:

mkdir -p ~/buildbot-test/worker_root

cd ~/buildbot-test/worker_root

Again, we create a virtual environment. On Python 3:

python3 -m venv sandbox

source sandbox/bin/activate

Install the buildbot-worker command:

pip install --upgrade pip

pip install buildbot-worker

# required for `runtests` build

Now, create the worker:

buildbot-worker create-worker my_worker localhost example-worker pass

Note

If you decided to create this from another computer, you should replace localhost with the

name of the computer where your master is running.

The username (example-worker), and password (pass) should be the same as those in

my_master/master.cfg; verify this is the case by looking at the section for

c['workers']:

cat ../master_root/my_master/master.cfg

And finally, start the worker:

buildbot-worker start my_worker

Check the worker’s output. It should end with lines like these:

2014-11-01 15:56:51+0100 [-] Connecting to localhost:9989

2014-11-01 15:56:51+0100 [Broker,client] message from master: attached

The worker appears to have (re)started correctly.

Meanwhile, from the other terminal, in the master log (twisted.log in the master

directory), you should see lines like these:

2014-11-01 15:56:51+0100 [Broker,1,127.0.0.1] worker 'example-worker' attaching from

IPv4Address(TCP, '127.0.0.1', 54015)

2014-11-01 15:56:51+0100 [Broker,1,127.0.0.1] Got workerinfo from 'example-worker'

2014-11-01 15:56:51+0100 [-] bot attached

1.1.6. Wrapping up

Your directory tree now should look like this:

~/buildbot-test/master_root/my_master # master base directory

~/buildbot-test/master_root/sandbox # virtualenv for master

~/buildbot-test/worker_root/my_worker # worker base directory

~/buildbot-test/worker_root/sandbox # virtualenv for worker

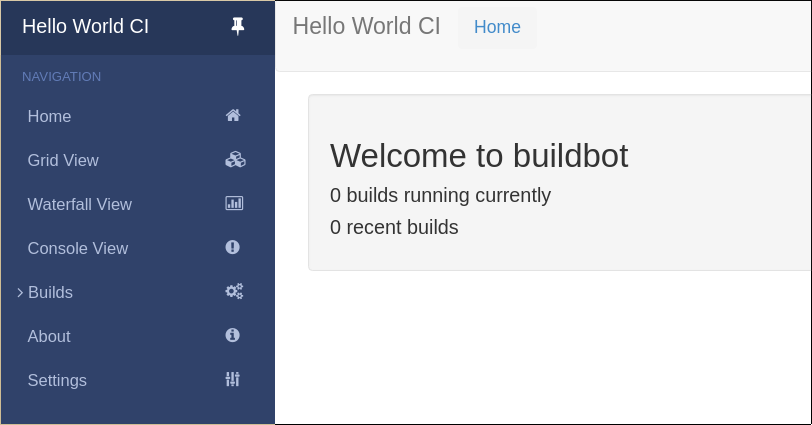

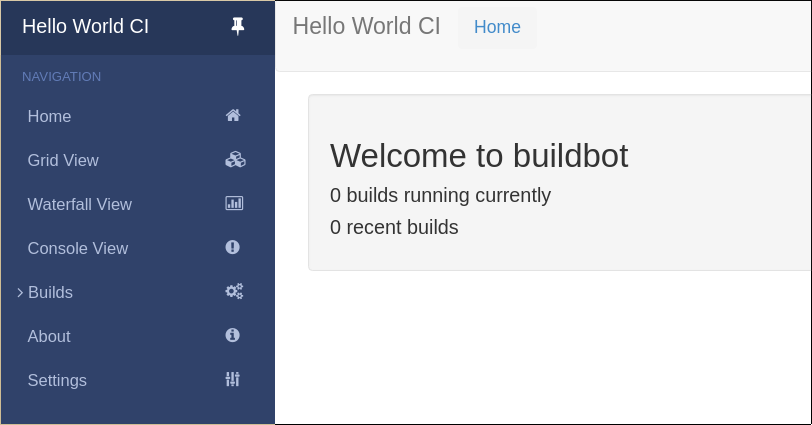

You should now be able to go to http://localhost:8010, where you will see a web page similar to:

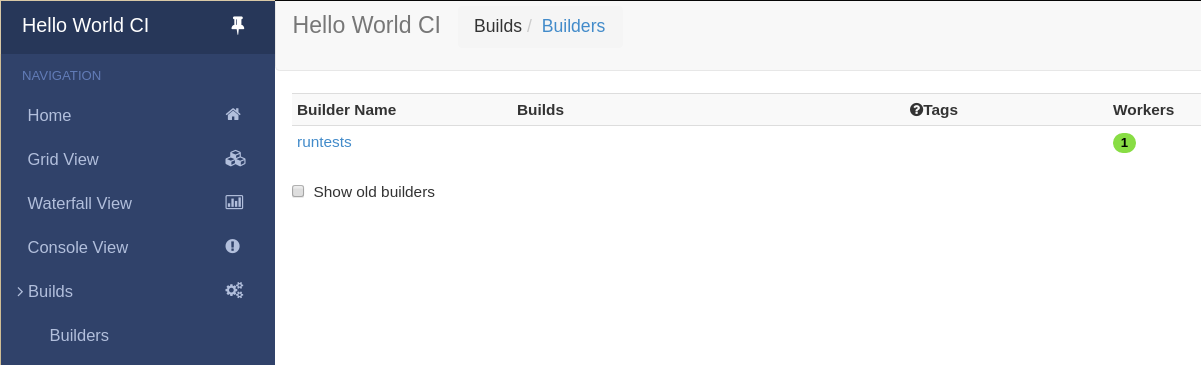

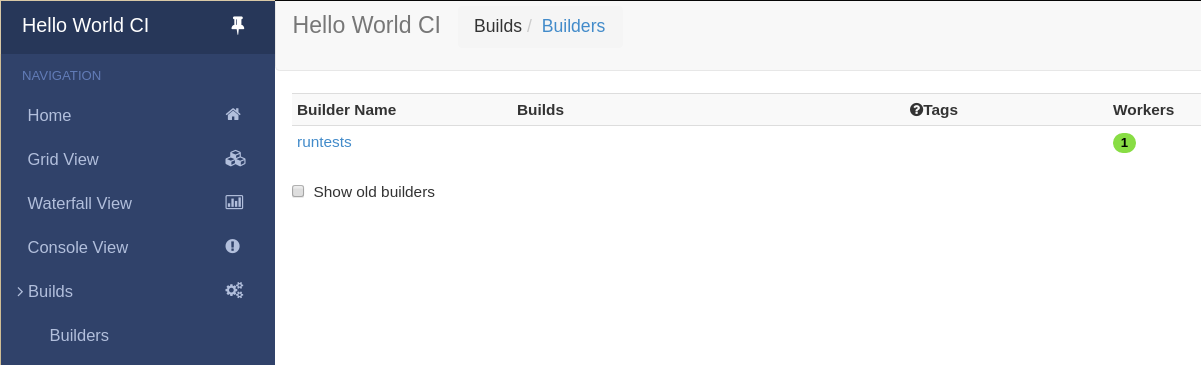

Click on “Builds” at the left to open the submenu and then Builders to see that the worker you just started (identified by the green bubble) has connected to the master:

Your master is now quietly waiting for new commits to hello-world. This doesn’t happen very often though. In the next section, we’ll see how to manually start a build.

We just wanted to get you to dip your toes in the water. It’s easy to take your first steps, but this is about as far as we can go without touching the configuration.

You’ve got a taste now, but you’re probably curious for more. Let’s step it up a little in the second tutorial by changing the configuration and doing an actual build. Continue on to A Quick Tour.

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

1.2. A Quick Tour

1.2.1. Goal

This tutorial will expand on the First Run tutorial by taking a quick tour around some of the features of buildbot that are hinted at in the comments in the sample configuration. We will simply change parts of the default configuration and explain the activated features.

As a part of this tutorial, we will make buildbot do a few actual builds.

This section will teach you how to:

make simple configuration changes and activate them

deal with configuration errors

force builds

enable and control the IRC bot

add a ‘try’ scheduler

1.2.2. The First Build

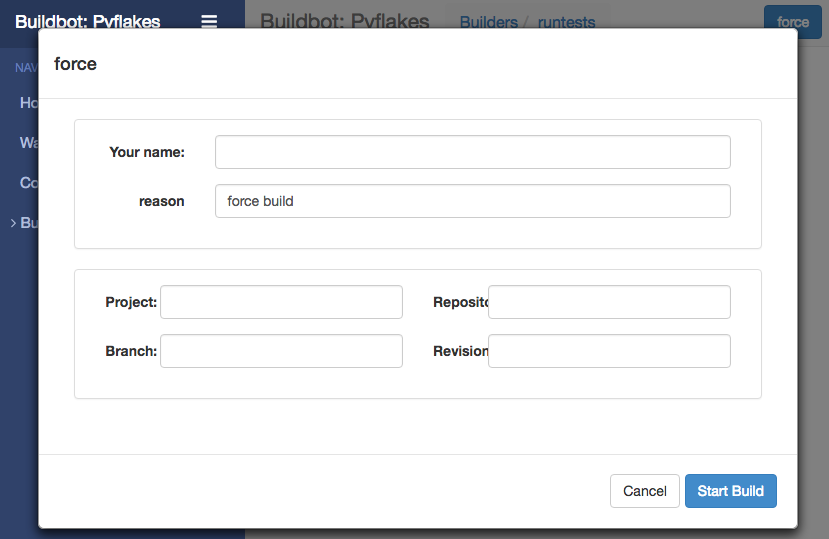

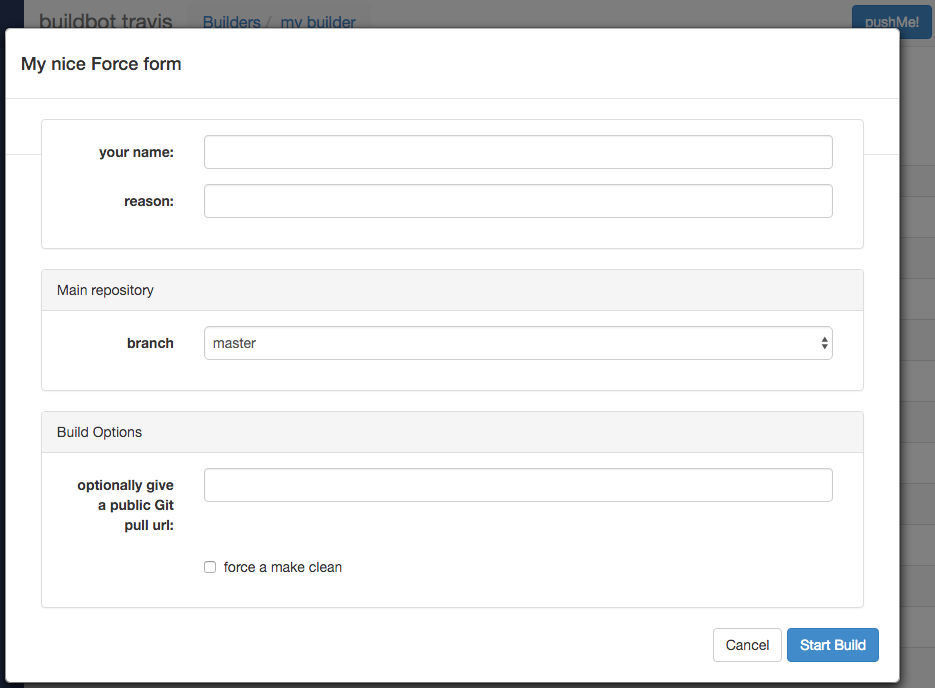

On the Builders page, click on the runtests link. You’ll see a builder page, and a blue “force” button that will bring up the following dialog box:

Click Start Build - there’s no need to fill in any of the fields in this case. Next, click on view in waterfall.

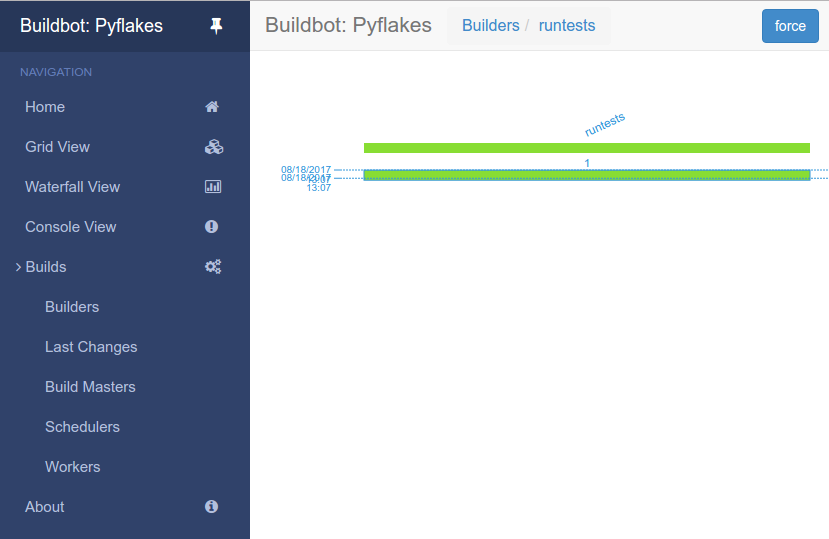

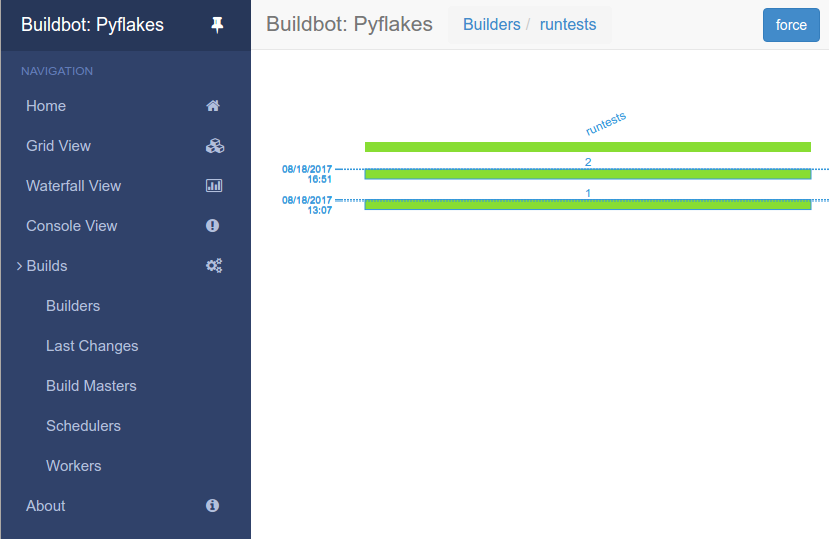

You will now see that a successful test run has happened:

This simple process is essentially the whole purpose of the Buildbot project.

The information about what actions are executed for a certain build are defined in things called builders.

The information about when a certain builder should launch a build are defined in things called schedulers. In fact, the blue “force” button that was pushed in this example activated a scheduler too.

1.2.3. Setting Project Name and URL

Let’s start simple by looking at where you would customize the buildbot’s project name and URL.

We continue where we left off in the First Run tutorial.

Open a new terminal, go to the directory you created master in, activate the same virtualenv

instance you created before, and open the master configuration file with an editor (here

$EDITOR is your editor of choice like vim, gedit, or emacs):

cd ~/buildbot-test/master

source sandbox/bin/activate

$EDITOR master/master.cfg

Now, look for the section marked PROJECT IDENTITY which reads:

####### PROJECT IDENTITY

# the 'title' string will appear at the top of this buildbot installation's

# home pages (linked to the 'titleURL').

c['title'] = "Hello World CI"

c['titleURL'] = "https://buildbot.github.io/hello-world/"

If you want, you can change either of these links to anything you want so that you can see what happens when you change them.

After making a change, go to the terminal and type:

buildbot reconfig master

You will see a handful of lines of output from the master log, much like this:

2011-12-04 10:11:09-0600 [-] loading configuration from /path/to/buildbot/master.cfg

2011-12-04 10:11:09-0600 [-] configuration update started

2011-12-04 10:11:09-0600 [-] builder runtests is unchanged

2011-12-04 10:11:09-0600 [-] removing IStatusReceiver <...>

2011-12-04 10:11:09-0600 [-] (TCP Port 8010 Closed)

2011-12-04 10:11:09-0600 [-] Stopping factory <...>

2011-12-04 10:11:09-0600 [-] adding IStatusReceiver <...>

2011-12-04 10:11:09-0600 [-] RotateLogSite starting on 8010

2011-12-04 10:11:09-0600 [-] Starting factory <...>

2011-12-04 10:11:09-0600 [-] Setting up http.log rotating 10 files of 10000000 bytes each

2011-12-04 10:11:09-0600 [-] WebStatus using (/path/to/buildbot/public_html)

2011-12-04 10:11:09-0600 [-] removing 0 old schedulers, updating 0, and adding 0

2011-12-04 10:11:09-0600 [-] adding 1 new changesources, removing 1

2011-12-04 10:11:09-0600 [-] gitpoller: using workdir '/path/to/buildbot/gitpoller-workdir'

2011-12-04 10:11:09-0600 [-] GitPoller repository already exists

2011-12-04 10:11:09-0600 [-] configuration update complete

Reconfiguration appears to have completed successfully.

The important lines are the ones telling you that the new configuration is being loaded (at the top) and that the update is complete (at the bottom).

Now, if you go back to the waterfall page, you will see that the project’s name is whatever you may have changed it to, and when you click on the URL of the project name at the bottom of the page, it should take you to the link you put in the configuration.

1.2.4. Configuration Errors

It is very common to make a mistake when configuring buildbot, so you might as well see now what happens in that case and what you can do to fix the error.

Open up the config again and introduce a syntax error by removing the first single quote in the two lines you changed before, so they read:

c[title'] = "Hello World CI"

c[titleURL'] = "https://buildbot.github.io/hello-world/"

This creates a Python SyntaxError.

Now go ahead and reconfig the master:

buildbot reconfig master

This time, the output looks like:

2015-08-14 18:40:46+0000 [-] beginning configuration update

2015-08-14 18:40:46+0000 [-] Loading configuration from '/data/buildbot/master/master.cfg'

2015-08-14 18:40:46+0000 [-] error while parsing config file:

Traceback (most recent call last):

File "/usr/local/lib/python2.7/dist-packages/buildbot/master.py", line 265, in reconfig

d = self.doReconfig()

File "/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py", line 1274, in unwindGenerator

return _inlineCallbacks(None, gen, Deferred())

File "/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py", line 1128, in _inlineCallbacks

result = g.send(result)

File "/usr/local/lib/python2.7/dist-packages/buildbot/master.py", line 289, in doReconfig

self.configFileName)

--- <exception caught here> ---

File "/usr/local/lib/python2.7/dist-packages/buildbot/config.py", line 156, in loadConfig

exec f in localDict

exceptions.SyntaxError: EOL while scanning string literal (master.cfg, line 103)

2015-08-14 18:40:46+0000 [-] error while parsing config file: EOL while scanning string literal (master.cfg, line 103) (traceback in logfile)

2015-08-14 18:40:46+0000 [-] reconfig aborted without making any changes

Reconfiguration failed. Please inspect the master.cfg file for errors,

correct them, then try 'buildbot reconfig' again.

This time, it’s clear that there was a mistake in the configuration. Luckily, the Buildbot master will ignore the wrong configuration and keep running with the previous configuration.

The message is clear enough, so open the configuration again, fix the error, and reconfig the master.

1.2.5. Enabling the IRC Bot

Buildbot includes an IRC bot that you can tell to join a channel to control and report on the status of buildbot.

Note

Security Note

Please note that any user having access to your IRC channel, or can send a private message to the bot, will be able to create or stop builds bug #3377.

First, start an IRC client of your choice, connect to irc.freenode.net and join an empty channel.

In this example we will use #buildbot-test, so go join that channel.

(Note: please do not join the main buildbot channel!)

Edit master.cfg and look for the BUILDBOT SERVICES section.

At the end of that section add the lines:

c['services'].append(reporters.IRC(host="irc.freenode.net", nick="bbtest",

channels=["#buildbot-test"]))

The reconfigure the master and type:

grep -i irc master/twistd.log

The log output should contain a line like this:

2016-11-13 15:53:06+0100 [-] Starting factory <...>

2016-11-13 15:53:19+0100 [IrcStatusBot,client] <...>: I have joined #buildbot-test

You should see the bot now joining in your IRC client. In your IRC channel, type:

bbtest: commands

to get a list of the commands the bot supports.

Let’s tell the bot to notify on certain events. To learn on which EVENTS we can notify, type:

bbtest: help notify

Now, let’s set some event notifications:

<@lsblakk> bbtest: notify on started finished failure

< bbtest> The following events are being notified: ['started', 'failure', 'finished']

Now, go back to the web interface and force another build. Alternatively, ask the bot to force a build:

<@lsblakk> bbtest: force build --codebase= runtests

< bbtest> build #1 of runtests started

< bbtest> Hey! build runtests #1 is complete: Success [finished]

You can also see the new builds in the web interface.

The full documentation is available at IRC.

1.2.7. Adding a ‘try’ scheduler

Buildbot includes a way for developers to submit patches for testing without committing them to the source code control system. (This is really handy for projects that support several operating systems or architectures.)

To set this up, add the following lines to master.cfg:

from buildbot.scheduler import Try_Userpass

c['schedulers'] = []

c['schedulers'].append(Try_Userpass(

name='try',

builderNames=['runtests'],

port=5555,

userpass=[('sampleuser','samplepass')]))

Then you can submit changes using the try command.

Let’s try this out by making a one-line change to hello-world, say, to make it trace the tree by default:

git clone https://github.com/buildbot/hello-world.git hello-world-git

cd hello-world-git/hello

$EDITOR __init__.py

# change 'return "hello " + who' on line 6 to 'return "greets " + who'

Then run buildbot’s try command as follows:

cd ~/buildbot-test/master

source sandbox/bin/activate

buildbot try --connect=pb --master=127.0.0.1:5555 \

--username=sampleuser --passwd=samplepass --vc=git

This will do git diff for you and send the resulting patch to the server for build and test

against the latest sources from Git.

Now go back to the waterfall page, click on the runtests link, and scroll down. You should see that another build has been started with your change (and stdout for the tests should be chock-full of parse trees as a result). The “Reason” for the job will be listed as “‘try’ job”, and the blamelist will be empty.

To make yourself show up as the author of the change, use the --who=emailaddr option on

buildbot try to pass your email address.

To make a description of the change show up, use the --properties=comment="this is a comment"

option on buildbot try.

To use ssh instead of a private username/password database, see Try_Jobdir.

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

1.3. First Buildbot run with Docker

Note

Docker can be tricky to get working correctly if you haven’t used it before. If you’re having trouble, first determine whether it is a Buildbot issue or a Docker issue by running:

docker run ubuntu:20.04 apt-get update

If that fails, look for help with your Docker install. On the other hand, if that succeeds, then you may have better luck getting help from members of the Buildbot community.

Docker is a tool that makes building and deploying custom environments a breeze. It uses lightweight linux containers (LXC) and performs quickly, making it a great instrument for the testing community. The next section includes a Docker pre-flight check. If it takes more that 3 minutes to get the ‘Success’ message for you, try the Buildbot pip-based first run instead.

1.3.1. Current Docker dependencies

Linux system, with at least kernel 3.8 and AUFS support. For example, Standard Ubuntu, Debian and Arch systems.

Packages: lxc, iptables, ca-certificates, and bzip2 packages.

Local clock on time or slightly in the future for proper SSL communication.

This tutorial uses docker-compose to run a master, a worker, and a postgresql database server

1.3.2. Installation

Use the Docker installation instructions for your operating system.

Make sure you install docker-compose. As root or inside a virtualenv, run:

pip install docker-compose

Test docker is happy in your environment:

sudo docker run -i busybox /bin/echo Success

1.3.3. Building and running Buildbot

# clone the example repository

git clone --depth 1 https://github.com/buildbot/buildbot-docker-example-config

# Build the Buildbot container (it will take a few minutes to download packages)

cd buildbot-docker-example-config/simple

docker-compose up

You should now be able to go to http://localhost:8010 and see a web page similar to:

Click on “Builds” at the left to open the submenu and then Builders to see that the worker you just started has connected to the master:

1.3.4. Overview of the docker-compose configuration

This docker-compose configuration is made as a basis for what you would put in production

Separated containers for each component

A solid database backend with postgresql

A buildbot master that exposes its configuration to the docker host

A buildbot worker that can be cloned in order to add additional power

Containers are linked together so that the only port exposed to external is the web server

The default master container is based on Alpine linux for minimal footprint

The default worker container is based on more widely known Ubuntu distribution, as this is the container you want to customize.

Download the config from a tarball accessible via a web server

1.3.5. Playing with your Buildbot containers

If you’ve come this far, you have a Buildbot environment that you can freely experiment with.

In order to modify the configuration, you need to fork the project on github https://github.com/buildbot/buildbot-docker-example-config Then you can clone your own fork, and start the docker-compose again.

To modify your config, edit the master.cfg file, commit your changes, and push to your fork.

You can use the command buildbot check-config in order to make sure the config is valid before the push.

You will need to change docker-compose.yml the variable BUILDBOT_CONFIG_URL in order to point to your github fork.

The BUILDBOT_CONFIG_URL may point to a .tar.gz file accessible from HTTP.

Several git servers like github can generate that tarball automatically from the master branch of a git repository

If the BUILDBOT_CONFIG_URL does not end with .tar.gz, it is considered to be the URL to a master.cfg file accessible from HTTP.

1.3.6. Customize your Worker container

It is advised to customize you worker container in order to suit your project’s build dependencies and need. An example DockerFile is available which the buildbot community uses for its own CI purposes:

https://github.com/buildbot/metabbotcfg/blob/nine/docker/metaworker/Dockerfile

1.3.7. Multi-master

A multi-master environment can be setup using the multimaster/docker-compose.yml file in the example repository

# Build the Buildbot container (it will take a few minutes to download packages)

cd buildbot-docker-example-config/simple

docker-compose up -d

docker-compose scale buildbot=4

1.3.8. Going forward

You’ve got a taste now, but you’re probably curious for more. Let’s step it up a little in the second tutorial by changing the configuration and doing an actual build. Continue on to A Quick Tour.

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

1.4. Further Reading

See the following user-contributed tutorials for other highlights and ideas:

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

1.4.1. Buildbot in 5 minutes - a user-contributed tutorial

(Ok, maybe 10.)

Buildbot is really an excellent piece of software, however it can be a bit confusing for a newcomer (like me when I first started looking at it). Typically, at first sight, it looks like a bunch of complicated concepts that make no sense and whose relationships with each other are unclear. After some time and some reread, it all slowly starts to be more and more meaningful, until you finally say “oh!” and things start to make sense. Once you get there, you realize that the documentation is great, but only if you already know what it’s about.

This is what happened to me, at least. Here, I’m going to (try to) explain things in a way that would have helped me more as a newcomer. The approach I’m taking is more or less the reverse of that used by the documentation. That is, I’m going to start from the components that do the actual work (the builders) and go up the chain to the change sources. I hope purists will forgive this unorthodoxy. Here I’m trying to only clarify the concepts and not go into the details of each object or property; the documentation explains those quite well.

1.4.1.1. Installation

I won’t cover the installation; both Buildbot master and worker are available as packages for the major distributions, and in any case the instructions in the official documentation are fine. This document will refer to Buildbot 0.8.5 which was current at the time of writing, but hopefully the concepts are not too different in future versions. All the code shown is of course python code, and has to be included in the master.cfg configuration file.

We won’t cover basic things such as how to define the workers, project names, or other administrative information that is contained in that file; for that, again the official documentation is fine.

1.4.1.2. Builders: the workhorses

Since Buildbot is a tool whose goal is the automation of software builds, it makes sense to me to start from where we tell Buildbot how to build our software: the builder (or builders, since there can be more than one).

Simply put, a builder is an element that is in charge of performing some action or sequence of

actions, normally something related to building software (for example, checking out the source, or

make all), but it can also run arbitrary commands.

A builder is configured with a list of workers that it can use to carry out its task. The other

fundamental piece of information that a builder needs is, of course, the list of things it has to

do (which will normally run on the chosen worker). In Buildbot, this list of things is represented

as a BuildFactory object, which is essentially a sequence of steps, each one defining a certain

operation or command.

Enough talk, let’s see an example. For this example, we are going to assume that our super software

project can be built using a simple make all, and there is another target make packages

that creates rpm, deb and tgz packages of the binaries. In the real world things are usually more

complex (for example there may be a configure step, or multiple targets), but the concepts are

the same; it will just be a matter of adding more steps to a builder, or creating multiple

builders, although sometimes the resulting builders can be quite complex.

So to perform a manual build of our project, we would type the following on the command line (assuming we are at the root of the local copy of the repository):

$ make clean # clean remnants of previous builds

...

$ svn update

...

$ make all

...

$ make packages

...

# optional but included in the example: copy packages to some central machine

$ scp packages/*.rpm packages/*.deb packages/*.tgz someuser@somehost:/repository

...

Here we’re assuming the repository is SVN, but again the concepts are the same with git, mercurial or any other VCS.

Now, to automate this, we create a builder where each step is one of the commands we typed above. A step can be a shell command object, or a dedicated object that checks out the source code (there are various types for different repositories, see the docs for more info), or yet something else:

from buildbot.plugins import steps, util

# first, let's create the individual step objects

# step 1: make clean; this fails if the worker has no local copy, but

# is harmless and will only happen the first time

makeclean = steps.ShellCommand(name="make clean",

command=["make", "clean"],

description="make clean")

# step 2: svn update (here updates trunk, see the docs for more

# on how to update a branch, or make it more generic).

checkout = steps.SVN(baseURL='svn://myrepo/projects/coolproject/trunk',

mode="update",

username="foo",

password="bar",

haltOnFailure=True)

# step 3: make all

makeall = steps.ShellCommand(name="make all",

command=["make", "all"],

haltOnFailure=True,

description="make all")

# step 4: make packages

makepackages = steps.ShellCommand(name="make packages",

command=["make", "packages"],

haltOnFailure=True,

description="make packages")

# step 5: upload packages to central server. This needs passwordless ssh

# from the worker to the server (set it up in advance as part of the worker setup)

uploadpackages = steps.ShellCommand(

name="upload packages",

description="upload packages",

command="scp packages/*.rpm packages/*.deb packages/*.tgz someuser@somehost:/repository",

haltOnFailure=True)

# create the build factory and add the steps to it

f_simplebuild = util.BuildFactory()

f_simplebuild.addStep(makeclean)

f_simplebuild.addStep(checkout)

f_simplebuild.addStep(makeall)

f_simplebuild.addStep(makepackages)

f_simplebuild.addStep(uploadpackages)

# finally, declare the list of builders. In this case, we only have one builder

c['builders'] = [

util.BuilderConfig(name="simplebuild", workernames=['worker1', 'worker2', 'worker3'],

factory=f_simplebuild)

]

So our builder is called simplebuild and can run on either of worker1, worker2 or

worker3. If our repository has other branches besides trunk, we could create another one or

more builders to build them; in this example, only the checkout step would be different, in that it

would need to check out the specific branch. Depending on how exactly those branches have to be

built, the shell commands may be recycled, or new ones would have to be created if they are

different in the branch. You get the idea. The important thing is that all the builders be named

differently and all be added to the c['builders'] value (as can be seen above, it is a list of

BuilderConfig objects).

Of course the type and number of steps will vary depending on the goal; for example, to just check

that a commit doesn’t break the build, we could include just up to the make all step. Or we

could have a builder that performs a more thorough test by also doing make test or other

targets. You get the idea. Note that at each step except the very first we use

haltOnFailure=True because it would not make sense to execute a step if the previous one failed

(ok, it wouldn’t be needed for the last step, but it’s harmless and protects us if one day we add

another step after it).

1.4.1.3. Schedulers

Now this is all nice and dandy, but who tells the builder (or builders) to run, and when? This is the job of the scheduler which is a fancy name for an element that waits for some event to happen, and when it does, based on that information, decides whether and when to run a builder (and which one or ones). There can be more than one scheduler. I’m being purposely vague here because the possibilities are almost endless and highly dependent on the actual setup, build purposes, source repository layout and other elements.

So a scheduler needs to be configured with two main pieces of information: on one hand, which events to react to, and on the other hand, which builder or builders to trigger when those events are detected. (It’s more complex than that, but if you understand this, you can get the rest of the details from the docs).

A simple type of scheduler may be a periodic scheduler that runs a certain builder (or builders) when a configurable amount of time has passed. In our example, that’s how we would trigger a build every hour:

from buildbot.plugins import schedulers

# define the periodic scheduler

hourlyscheduler = schedulers.Periodic(name="hourly",

builderNames=["simplebuild"],

periodicBuildTimer=3600)

# define the available schedulers

c['schedulers'] = [hourlyscheduler]

That’s it. Every hour this hourly scheduler will run the simplebuild builder. If we have

more than one builder that we want to run every hour, we can just add them to the builderNames

list when defining the scheduler. Or since multiple schedulers are allowed, other schedulers can be

defined and added to c['schedulers'] in the same way.

Other types of schedulers exist; in particular, there are schedulers that can be more dynamic than the periodic one. The typical dynamic scheduler is one that learns about changes in a source repository (generally because some developer checks in some change) and triggers one or more builders in response to those changes. Let’s assume for now that the scheduler “magically” learns about changes in the repository (more about this later); here’s how we would define it:

from buildbot.plugins import schedulers

# define the dynamic scheduler

trunkchanged = schedulers.SingleBranchScheduler(name="trunkchanged",

change_filter=util.ChangeFilter(branch=None),

treeStableTimer=300,

builderNames=["simplebuild"])

# define the available schedulers

c['schedulers'] = [trunkchanged]

This scheduler receives changes happening to the repository, and among all of them, pays attention

to those happening in “trunk” (that’s what branch=None means). In other words, it filters the

changes to react only to those it’s interested in. When such changes are detected, and the tree has

been quiet for 5 minutes (300 seconds), it runs the simplebuild builder. The

treeStableTimer helps in those situations where commits tend to happen in bursts, which would

otherwise result in multiple build requests queuing up.

What if we want to act on two branches (say, trunk and 7.2)? First, we create two builders, one for each branch, and then we create two dynamic schedulers:

from buildbot.plugins import schedulers

# define the dynamic scheduler for trunk

trunkchanged = schedulers.SingleBranchScheduler(name="trunkchanged",

change_filter=util.ChangeFilter(branch=None),

treeStableTimer=300,

builderNames=["simplebuild-trunk"])

# define the dynamic scheduler for the 7.2 branch

branch72changed = schedulers.SingleBranchScheduler(

name="branch72changed",

change_filter=util.ChangeFilter(branch='branches/7.2'),

treeStableTimer=300,

builderNames=["simplebuild-72"])

# define the available schedulers

c['schedulers'] = [trunkchanged, branch72changed]

The syntax of the change filter is VCS-dependent (above is for SVN), but again, once the idea is

clear, the documentation has all the details. Another feature of the scheduler is that it can be

told which changes, within those it’s paying attention to, are important and which are not. For

example, there may be a documentation directory in the branch the scheduler is watching, but

changes under that directory should not trigger a build of the binary. This finer filtering is

implemented by means of the fileIsImportant argument to the scheduler (full details in the docs

and - alas - in the sources).

1.4.1.4. Change sources

Earlier, we said that a dynamic scheduler “magically” learns about changes; the final piece of the puzzle is change sources, which are precisely the elements in Buildbot whose task is to detect changes in a repository and communicate them to the schedulers. Note that periodic schedulers don’t need a change source since they only depend on elapsed time; dynamic schedulers, on the other hand, do need a change source.

A change source is generally configured with information about a source repository (which is where changes happen). A change source can watch changes at different levels in the hierarchy of the repository, so for example, it is possible to watch the whole repository or a subset of it, or just a single branch. This determines the extent of the information that is passed down to the schedulers.

There are many ways a change source can learn about changes; it can periodically poll the repository for changes, or the VCS can be configured (for example through hook scripts triggered by commits) to push changes into the change source. While these two methods are probably the most common, they are not the only possibilities. It is possible, for example, to have a change source detect changes by parsing an email sent to a mailing list when a commit happens. Yet other methods exist and the manual again has the details.

To complete our example, here’s a change source that polls a SVN repository every 2 minutes:

from buildbot.plugins import changes, util

svnpoller = changes.SVNPoller(repourl="svn://myrepo/projects/coolproject",

svnuser="foo",

svnpasswd="bar",

pollInterval=120,

split_file=util.svn.split_file_branches)

c['change_source'] = svnpoller

This poller watches the whole “coolproject” section of the repository, so it will detect changes in all the branches. We could have said:

repourl = "svn://myrepo/projects/coolproject/trunk"

or:

repourl = "svn://myrepo/projects/coolproject/branches/7.2"

to watch only a specific branch.

To watch another project, you need to create another change source, and you need to filter changes by project. For instance, when you add a change source watching project ‘superproject’ to the above example, you need to change the original scheduler from:

trunkchanged = schedulers.SingleBranchScheduler(

name="trunkchanged",

change_filter=filter.ChangeFilter(branch=None),

# ...

)

to e.g.:

trunkchanged = schedulers.SingleBranchScheduler(

name="trunkchanged",

change_filter=filter.ChangeFilter(project="coolproject", branch=None),

# ...

)

otherwise, coolproject will be built when there’s a change in superproject.

Since we’re watching more than one branch, we need a method to tell in which branch the change

occurred when we detect one. This is what the split_file argument does, it takes a callable

that Buildbot will call to do the job. The split_file_branches function, which comes with Buildbot,

is designed for exactly this purpose so that’s what the example above uses.

And of course this is all SVN-specific, but there are pollers for all the popular VCSs.

Note that if you have many projects, branches, and builders, it probably pays not to hardcode all the schedulers and builders in the configuration, but generate them dynamically starting from the list of all projects, branches, targets, etc, and using loops to generate all possible combinations (or only the needed ones, depending on the specific setup), as explained in the documentation chapter about Customization.

1.4.1.5. Reporters

Now that the basics are in place, let’s go back to the builders, which is where the real work happens. Reporters are simply the means Buildbot uses to inform the world about what’s happening, that is, how builders are doing. There are many reporters: a mail notifier, an IRC notifier, and others. They are described fairly well in the manual.

One thing I’ve found useful is the ability to pass a domain name as the lookup argument to a

mailNotifier, which allows you to take an unqualified username as it appears in the SVN change

and create a valid email address by appending the given domain name to it:

from buildbot.plugins import reporter

# if jsmith commits a change, an email for the build is sent to jsmith@example.org

notifier = reporter.MailNotifier(fromaddr="buildbot@example.org",

sendToInterestedUsers=True,

lookup="example.org")

c['reporters'].append(notifier)

The mail notifier can be customized at will by means of the messageFormatter argument, which is

a class that Buildbot calls to format the body of the email, and to which it makes available lots

of information about the build. For more details, look into the Reporters section of the

Buildbot manual.

1.4.1.6. Conclusion

Please note that this article has just scratched the surface; given the complexity of the task of build automation, the possibilities are almost endless. So there’s much much more to say about Buildbot. Hopefully this has been a gentle introduction before reading the official manual. Had I found an explanation as the one above when I was approaching Buildbot, I’d have had to read the manual just once, rather than multiple times. I hope this can help someone else.

(Thanks to Davide Brini for permission to include this tutorial, derived from one he originally posted at http://backreference.org .)

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

This is the Buildbot manual for Buildbot version latest.

2. Buildbot Manual

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

2.1. Introduction

Buildbot is a framework to automate the compile and test cycle that is used to validate code changes in most software projects.

Features:

run builds on a variety of worker platforms

arbitrary build process: handles projects using C, Python, whatever

minimal host requirements: Python and Twisted

workers can be behind a firewall if they can still do checkout

status delivery through web page, email, IRC, other protocols

flexible configuration by subclassing generic build process classes

debug tools to force a new build, submit fake

Changes, query worker statusreleased under the GPL

2.1.1. System Architecture

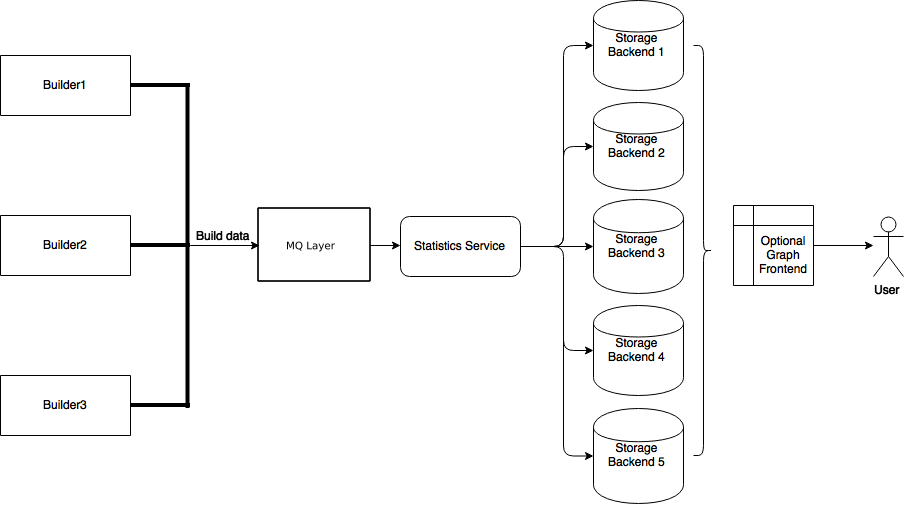

Buildbot consists of a single buildmaster and one or more workers that connect to the master. The buildmaster makes all decisions about what, when, and how to build. The workers only connect to master and execute whatever commands they are instructed to execute.

The usual flow of information is as follows:

the buildmaster fetches new code changes from version control systems

the buildmaster decides what builds (if any) to start

the builds are performed by executing commands on the workers (e.g.

git clone,make,make check).the workers send the results of the commands back to the buildmaster

buildmaster interprets the results of the commands and marks the builds as successful or failing

buildmaster sends success or failure reports to external services to e.g. inform the developers.

2.1.1.1. Worker Connections

The workers connect to the buildmaster over a TCP connection to a publicly-visible port. This allows workers to live behind a NAT or similar firewalls as long as they can get to buildmaster. After the connection is established, the connection is bidirectional: commands flow from the buildmaster to the worker and results flow from the worker to the buildmaster.

The buildmaster does not provide the workers with the source code itself, only with commands necessary to perform the source code checkout. As a result, the workers need to be able to reach the source code repositories that they are supposed to build.

2.1.1.2. Buildmaster Architecture

The following is rough overview of the data flow within the buildmaster.

The following provides a short overview of the core components of Buildbot master. For a more detailed description see the Concepts page.

The core components of Buildbot master are as follows:

- Builders

A builder is a user-configurable description of how to perform a build. It defines what steps a new build will have, what workers it may run on and a couple of other properties. A builder takes a build request which specifies the intention to create a build for specific versions of code and produces a build which is a concrete description of a build including a list of steps to perform, the worker this needs to be performed on and so on.

- Schedulers:

A scheduler is a user-configurable component that decides when to start a build. The decision could be based on time, on new code being committed or on similar events.

- Change Sources:

Change sources are user-configurable components that interact with external version control systems and retrieve new code. Internally new code is represented as Changes which roughly correspond to single commit or changeset. The design of Buildbot requires the workers to have their own copies of the source code, thus change sources is an optional component as long as there are no schedulers that create new builds based on new code commit events.

- Reporters

Reporters are user-configurable components that send information about started or completed builds to external sources. Buildbot provides its own web application to observe this data, so reporters are optional. However they can be used to provide up to date build status on platforms such as GitHub or sending emails.

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

2.2. Installation

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

2.2.1. Buildbot Components

Buildbot is shipped in two components: the buildmaster (called buildbot for legacy reasons)

and the worker. The worker component has far fewer requirements, and is more broadly compatible

than the buildmaster. You will need to carefully pick the environment in which to run your

buildmaster, but the worker should be able to run just about anywhere.

It is possible to install the buildmaster and worker on the same system, although for anything but the smallest installation this arrangement will not be very efficient.

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

2.2.2. Requirements

2.2.2.1. Common Requirements

At a bare minimum, you’ll need the following for both the buildmaster and a worker:

Python: https://www.python.org

Buildbot master works with Python-3.9+. Buildbot worker works with Python-3.7+.

Note

This should be a “normal” build of Python. Builds of Python with debugging enabled or other unusual build parameters are likely to cause incorrect behavior.

Twisted: http://twistedmatrix.com

Buildbot requires Twisted-17.9.0 or later on the master and the worker. In upcoming versions of Buildbot, a newer Twisted will also be required on the worker. As always, the most recent version is recommended.

Certifi: https://github.com/certifi/python-certifi

Certifi provides collection of Root Certificates for validating the trustworthiness of SSL certificates. Unfortunately it does not support any addition of own company certificates. At the moment you need to add your own .PEM content to cacert.pem manually.

Of course, your project’s build process will impose additional requirements on the workers. These hosts must have all the tools necessary to compile and test your project’s source code.

Note

If your internet connection is secured by a proxy server, please check your http_proxy and https_proxy environment variables.

Otherwise pip and other tools will fail to work.

Windows Support

Buildbot - both master and worker - runs well natively on Windows. The worker runs well on Cygwin, but because of problems with SQLite on Cygwin, the master does not.

Buildbot’s windows testing is limited to the most recent Twisted and Python versions. For best results, use the most recent available versions of these libraries on Windows.

Pywin32: http://sourceforge.net/projects/pywin32/

Twisted requires PyWin32 in order to spawn processes on Windows.

Build Tools for Visual Studio 2019 - Microsoft Visual C++ compiler

Twisted requires MSVC to compile some parts like tls during the installation, see https://twistedmatrix.com/trac/wiki/WindowsBuilds and https://wiki.python.org/moin/WindowsCompilers.

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

2.2.3. Installing the code

2.2.3.1. The Buildbot Packages

Buildbot comes in several parts: buildbot (the buildmaster), buildbot-worker (the worker),

buildbot-www, and several web plugins such as buildbot-waterfall-view.

The worker and buildmaster can be installed individually or together.

The base web (buildbot.www) and web plugins are required to run a master with a web interface

(the common configuration).

2.2.3.2. Installation From PyPI

The preferred way to install Buildbot is using pip.

For the master:

pip install buildbot

and for the worker:

pip install buildbot-worker

When using pip to install, instead of distribution specific package managers, e.g. via apt or

ports, it is simpler to choose exactly which version one wants to use.

It may however be easier to install via distribution specific package managers, but note that they

may provide an earlier version than what is available via pip.

If you plan to use TLS or SSL in master configuration (e.g. to fetch resources over HTTPS using

twisted.web.client), you need to install Buildbot with tls extras:

pip install buildbot[tls]

2.2.3.3. Installation From Tarballs

Use pip to install buildbot master or buildbot-worker using tarball.

Note

Support for installation using setup.py has been discontinued due to the deprecation of

support in the distutils and setuptools packages.

For more details, see Why you shouldn’t invoke setup.py directly.

If you have a tarball file named buildbot.tar.gz in your current directory, you can install it using:

pip install buildbot.tar.gz

Alternatively, you can provide a URL if the tarball is hosted online. Make sure to replace the URL with the actual URL of tarball you want to install.

pip install https://github.com/buildbot/buildbot/releases/download/v3.10.1/buildbot-3.10.1.tar.gz

Installation may need to be done as root.

This will put the bulk of the code in somewhere like /usr/lib/pythonx.y/site-packages/buildbot.

It will also install the buildbot command-line tool in /usr/bin/buildbot.

If the environment variable $NO_INSTALL_REQS is set to 1, then setup.py will not

try to install Buildbot’s requirements.

This is usually only useful when building a Buildbot package.

To test this, shift to a different directory (like /tmp), and run:

buildbot --version

# or

buildbot-worker --version

If it shows you the versions of Buildbot and Twisted, the install went ok.

If it says “no such command” or gets an ImportError when it tries to load the libraries, then

something went wrong. pydoc buildbot is another useful diagnostic tool.

Windows users will find these files in other places.

You will need to make sure that Python can find the libraries, and will probably find it convenient

to have buildbot in your PATH.

2.2.3.4. Installation in a Virtualenv

If you cannot or do not wish to install buildbot into a site-wide location like /usr or /usr/local, you can also install it into the account’s home directory or any other location using a tool like virtualenv.

2.2.3.5. Running Buildbot’s Tests (optional)

If you wish, you can run the buildbot unit test suite.

First, ensure that you have the mock Python module installed

from PyPI. You must not be using a Python wheels packaged version of Buildbot or have specified

the bdist_wheel command when building.

The test suite is not included with the PyPi packaged version.

This module is not required for ordinary Buildbot operation - only to run the tests.

Note that this is not the same as the Fedora mock package!

You can check if you have mock with:

python -mmock

Then, run the tests:

PYTHONPATH=. trial buildbot.test

# or

PYTHONPATH=. trial buildbot_worker.test

Nothing should fail, although a few might be skipped.

If any of the tests fail for reasons other than a missing mock, you should stop and investigate

the cause before continuing the installation process, as it will probably be easier to track down

the bug early.

In most cases, the problem is incorrectly installed Python modules or a badly configured PYTHONPATH.

This may be a good time to contact the Buildbot developers for help.

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

2.2.4. Buildmaster Setup

2.2.4.1. Creating a buildmaster

As you learned earlier (System Architecture), the buildmaster runs on a central host (usually one that is publicly visible, so everybody can check on the status of the project), and controls all aspects of the buildbot system

You will probably wish to create a separate user account for the buildmaster, perhaps named

buildmaster. Do not run the buildmaster as root!

You need to choose a directory for the buildmaster, called the basedir.

This directory will be owned by the buildmaster.

It will contain the configuration, database, and status information - including logfiles.

On a large buildmaster this directory will see a lot of activity, so it should be on a disk with

adequate space and speed.

Once you’ve picked a directory, use the buildbot create-master command to create the directory

and populate it with startup files:

buildbot create-master -r basedir

You will need to create a configuration file before starting the buildmaster.

Most of the rest of this manual is dedicated to explaining how to do this.

A sample configuration file is placed in the working directory, named master.cfg.sample,

which can be copied to master.cfg and edited to suit your purposes.

(Internal details: This command creates a file named buildbot.tac that contains all the

state necessary to create the buildmaster.

Twisted has a tool called twistd which can use this .tac file to create and launch a buildmaster

instance. Twistd takes care of logging and daemonization (running the program in the background).

/usr/bin/buildbot is a front end which runs twistd for you.)

Your master will need a database to store the various information about your builds, and its

configuration. By default, the sqlite3 backend will be used.

This needs no configuration, neither extra software.

All information will be stored in the file state.sqlite.

Buildbot however supports multiple backends.

See Using A Database Server for more options.

Buildmaster Options

This section lists options to the create-master command.

You can also type buildbot create-master --help for an up-to-the-moment summary.

- --force

This option will allow to re-use an existing directory.

- --no-logrotate

This disables internal worker log management mechanism. With this option worker does not override the default logfile name and its behaviour giving a possibility to control those with command-line options of twistd daemon.

- --relocatable

This creates a “relocatable”

buildbot.tac, which uses relative paths instead of absolute paths, so that the buildmaster directory can be moved about.

- --config

The name of the configuration file to use. This configuration file need not reside in the buildmaster directory.

- --log-size

This is the size in bytes when exceeded to rotate the Twisted log files. The default is 10MiB.

- --log-count

This is the number of log rotations to keep around. You can either specify a number or

Noneto keep alltwistd.logfiles around. The default is 10.

- --db

The database that the Buildmaster should use. Note that the same value must be added to the configuration file.

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

2.2.5. Worker Setup

2.2.5.1. Creating a worker

Typically, you will be adding a worker to an existing buildmaster, to provide additional architecture coverage. The Buildbot administrator will give you several pieces of information necessary to connect to the buildmaster. You should also be somewhat familiar with the project being tested so that you can troubleshoot build problems locally.

Buildbot exists to make sure that the project’s stated how to build it process actually works.

To this end, the worker should run in an environment just like that of your regular developers.

Typically the project’s build process is documented somewhere (README, INSTALL, etc),

in a document that should mention all library dependencies and contain a basic set of build instructions.

This document will be useful as you configure the host and account in which worker runs.

Here’s a good checklist for setting up a worker:

Set up the account

It is recommended (although not mandatory) to set up a separate user account for the worker. This account is frequently named

buildbotorworker. This serves to isolate your personal working environment from that of the worker’s, and helps to minimize the security threat posed by letting possibly-unknown contributors run arbitrary code on your system. The account should have a minimum of fancy init scripts.

Install the Buildbot code

Follow the instructions given earlier (Installing the code). If you use a separate worker account, and you didn’t install the Buildbot code to a shared location, then you will need to install it with

--home=~for each account that needs it.

Set up the host

Make sure the host can actually reach the buildmaster. Usually the buildmaster is running a status webserver on the same machine, so simply point your web browser at it and see if you can get there. Install whatever additional packages or libraries the project’s INSTALL document advises. (or not: if your worker is supposed to make sure that building without optional libraries still works, then don’t install those libraries.)

Again, these libraries don’t necessarily have to be installed to a site-wide shared location, but they must be available to your build process. Accomplishing this is usually very specific to the build process, so installing them to

/usror/usr/localis usually the best approach.

Test the build process

Follow the instructions in the

INSTALLdocument, in the worker’s account. Perform a full CVS (or whatever) checkout, configure, make, run tests, etc. Confirm that the build works without manual fussing. If it doesn’t work when you do it manually, it will be unlikely to work when Buildbot attempts to do it in an automated fashion.

Choose a base directory

This should be somewhere in the worker’s account, typically named after the project which is being tested. The worker will not touch any file outside of this directory. Something like

~/Buildbotor~/Workers/fooprojectis appropriate.

Get the buildmaster host/port, workername, and password

When the Buildbot admin configures the buildmaster to accept and use your worker, they will provide you with the following pieces of information:

your worker’s name

the password assigned to your worker

the hostname and port number of the buildmaster

Create the worker

Now run the ‘worker’ command as follows:

buildbot-worker create-worker BASEDIR MASTERHOST:PORT WORKERNAME PASSWORDThis will create the base directory and a collection of files inside, including the

buildbot.tacfile that contains all the information you passed to the buildbot-worker command.

Fill in the hostinfo files

When it first connects, the worker will send a few files up to the buildmaster which describe the host that it is running on. These files are presented on the web status display so that developers have more information to reproduce any test failures that are witnessed by the Buildbot. There are sample files in the

infosubdirectory of the Buildbot’s base directory. You should edit these to correctly describe you and your host.

BASEDIR/info/adminshould contain your name and email address. This is theworker admin address, and will be visible from the build status page (so you may wish to munge it a bit if address-harvesting spambots are a concern).

BASEDIR/info/hostshould be filled with a brief description of the host: OS, version, memory size, CPU speed, versions of relevant libraries installed, and finally the version of the Buildbot code which is running the worker.The optional

BASEDIR/info/access_urican specify a URI which will connect a user to the machine. Many systems acceptssh://hostnameURIs for this purpose.If you run many workers, you may want to create a single

~worker/infofile and share it among all the workers with symlinks.

Worker Options

There are a handful of options you might want to use when creating the worker with the

buildbot-worker create-worker <options> DIR <params> command.

You can type buildbot-worker create-worker --help for a summary.

To use these, just include them on the buildbot-worker create-worker command line, like this

buildbot-worker create-worker --umask=0o22 ~/worker buildmaster.example.org:42012 \

{myworkername} {mypasswd}

- --protocol

This is a string representing a protocol to be used when creating master-worker connection. The default option is Perspective Broker (

pb). Additionally, there is an experimental MessagePack-based protocol (msgpack_experimental_v7).

- --no-logrotate

This disables internal worker log management mechanism. With this option worker does not override the default logfile name and its behaviour giving a possibility to control those with command-line options of twistd daemon.

- --umask

This is a string (generally an octal representation of an integer) which will cause the worker process’

umaskvalue to be set shortly after initialization. Thetwistddaemonization utility forces the umask to 077 at startup (which means that all files created by the worker or its child processes will be unreadable by any user other than the worker account). If you want build products to be readable by other accounts, you can add--umask=0o22to tell the worker to fix the umask after twistd clobbers it. If you want build products to be writable by other accounts too, use--umask=0o000, but this is likely to be a security problem.

- --keepalive

This is a number that indicates how frequently

keepalivemessages should be sent from the worker to the buildmaster, expressed in seconds. The default (600) causes a message to be sent to the buildmaster at least once every 10 minutes. To set this to a lower value, use e.g.--keepalive=120.If the worker is behind a NAT box or stateful firewall, these messages may help to keep the connection alive: some NAT boxes tend to forget about a connection if it has not been used in a while. When this happens, the buildmaster will think that the worker has disappeared, and builds will time out. Meanwhile the worker will not realize that anything is wrong.

- --maxdelay

This is a number that indicates the maximum amount of time the worker will wait between connection attempts, expressed in seconds. The default (300) causes the worker to wait at most 5 minutes before trying to connect to the buildmaster again.

- --maxretries

This is a number that indicates the maximum number of times the worker will make connection attempts. After that amount, the worker process will stop. This option is useful for Latent Workers to avoid consuming resources in case of misconfiguration or master failure.

For VM based latent workers, the user is responsible for halting the system when the Buildbot worker has exited. This feature is heavily OS dependent, and cannot be managed by the Buildbot worker. For example, with systemd, one can add

ExecStopPost=shutdown nowto the Buildbot worker service unit configuration.

- --log-size

This is the size in bytes when exceeded to rotate the Twisted log files.

- --log-count

This is the number of log rotations to keep around. You can either specify a number or

Noneto keep alltwistd.logfiles around. The default is 10.

- --allow-shutdown

Can also be passed directly to the worker constructor in

buildbot.tac. If set, it allows the worker to initiate a graceful shutdown, meaning that it will ask the master to shut down the worker when the current build, if any, is complete.Setting allow_shutdown to

filewill cause the worker to watchshutdown.stampin basedir for updates to its mtime. When the mtime changes, the worker will request a graceful shutdown from the master. The file does not need to exist prior to starting the worker.Setting allow_shutdown to

signalwill set up a SIGHUP handler to start a graceful shutdown. When the signal is received, the worker will request a graceful shutdown from the master.The default value is

None, in which case this feature will be disabled.Both master and worker must be at least version 0.8.3 for this feature to work.

- --use-tls

Can also be passed directly to the Worker constructor in

buildbot.tac. If set, the generated connection string starts withtlsinstead of withtcp, allowing encrypted connection to the buildmaster. Make sure the worker trusts the buildmasters certificate. If you have an non-authoritative certificate (CA is self-signed) see option--connection-stringand also Worker-TLS-Config below.

- --delete-leftover-dirs

Can also be passed directly to the Worker constructor in

buildbot.tac. If set, unexpected directories in worker base directory will be removed. Otherwise, a warning will be displayed intwistd.logso that you can manually remove them.

- --connection-string

Can also be passed directly to the Worker constructor in

buildbot.tac. If set, the worker connection to master will be made using thisconnection_string. See Worker-TLS-Config below for more details. Note that this option will override required positional argumentmasterhost[:port]and also option--use-tls.

- --proxy-connection-string

Can also be passed directly to the Worker constructor in

buildbot.tac. If set, the worker connection will be tunneled through a HTTP proxy specified by the option value.

Other Worker Configuration

unicode_encodingThis represents the encoding that Buildbot should use when converting unicode commandline arguments into byte strings in order to pass to the operating system when spawning new processes.

The default value is what Python’s

sys.getfilesystemencodingreturns, which on Windows is ‘mbcs’, on macOS is ‘utf-8’, and on Unix depends on your locale settings.If you need a different encoding, this can be changed in your worker’s

buildbot.tacfile by adding aunicode_encodingargument to the Worker constructor.

s = Worker(buildmaster_host, port, workername, passwd, basedir,

keepalive, usepty, umask=umask, maxdelay=maxdelay,

unicode_encoding='utf-8', allow_shutdown='signal')

Worker TLS Configuration

tlsSee

--useTlsoption above as an alternative to setting theconneciton_stringmanually.connection_stringFor TLS connections to the master, the

connection_string-argument must be passed to the worker constructor.buildmaster_hostandportmust then beNone.connection_stringwill be used to create a client endpoint with clientFromString. An example ofconnection_stringis"TLS:buildbot-master.com:9989".See more about how to formulate the connection string in ConnectionStrings.

Example TLS connection string:

s = Worker(None, None, workername, passwd, basedir, keepalive, connection_string='TLS:buildbot-master.com:9989')

Make sure the worker trusts the certificate of the master. If you have a non-authoritative certificate (CA is self-signed), the trustRoots parameter can be used.

s = Worker(None, None, workername, passwd, basedir, keepalive, connection_string= 'TLS:buildbot-master.com:9989:trustRoots=/dir-with-ca-certs')

It must point to a directory with PEM-encoded certificates. For example:

$ cat /dir-with-ca-certs/ca.pem -----BEGIN CERTIFICATE----- MIIE9DCCA9ygAwIBAgIJALEqLrC/m1w3MA0GCSqGSIb3DQEBCwUAMIGsMQswCQYD VQQGEwJaWjELMAkGA1UECBMCUUExEDAOBgNVBAcTB05vd2hlcmUxETAPBgNVBAoT CEJ1aWxkYm90MRkwFwYDVQQLExBEZXZlbG9wbWVudCBUZWFtMRQwEgYDVQQDEwtC dWlsZGJvdCBDQTEQMA4GA1UEKRMHRWFzeVJTQTEoMCYGCSqGSIb3DQEJARYZYnVp bGRib3RAaW50ZWdyYXRpb24udGVzdDAeFw0xNjA5MDIxMjA5NTJaFw0yNjA4MzEx MjA5NTJaMIGsMQswCQYDVQQGEwJaWjELMAkGA1UECBMCUUExEDAOBgNVBAcTB05v d2hlcmUxETAPBgNVBAoTCEJ1aWxkYm90MRkwFwYDVQQLExBEZXZlbG9wbWVudCBU ZWFtMRQwEgYDVQQDEwtCdWlsZGJvdCBDQTEQMA4GA1UEKRMHRWFzeVJTQTEoMCYG CSqGSIb3DQEJARYZYnVpbGRib3RAaW50ZWdyYXRpb24udGVzdDCCASIwDQYJKoZI hvcNAQEBBQADggEPADCCAQoCggEBALJZcC9j4XYBi1fYT/fibY2FRWn6Qh74b1Pg I7iIde6Sf3DPdh/ogYvZAT+cIlkZdo4v326d0EkuYKcywDvho8UeET6sIYhuHPDW lRl1Ret6ylxpbEfxFNvMoEGNhYAP0C6QS2eWEP9LkV2lCuMQtWWzdedjk+efqBjR Gozaim0lr/5lx7bnVx0oRLAgbI5/9Ukbopansfr+Cp9CpFpbNPGZSmELzC3FPKXK 5tycj8WEqlywlha2/VRnCZfYefB3aAuQqQilLh+QHyhn6hzc26+n5B0l8QvrMkOX atKdznMLzJWGxS7UwmDKcsolcMAW+82BZ8nUCBPF3U5PkTLO540CAwEAAaOCARUw ggERMB0GA1UdDgQWBBT7A/I+MZ1sFFJ9jikYkn51Q3wJ+TCB4QYDVR0jBIHZMIHW gBT7A/I+MZ1sFFJ9jikYkn51Q3wJ+aGBsqSBrzCBrDELMAkGA1UEBhMCWloxCzAJ BgNVBAgTAlFBMRAwDgYDVQQHEwdOb3doZXJlMREwDwYDVQQKEwhCdWlsZGJvdDEZ MBcGA1UECxMQRGV2ZWxvcG1lbnQgVGVhbTEUMBIGA1UEAxMLQnVpbGRib3QgQ0Ex EDAOBgNVBCkTB0Vhc3lSU0ExKDAmBgkqhkiG9w0BCQEWGWJ1aWxkYm90QGludGVn cmF0aW9uLnRlc3SCCQCxKi6wv5tcNzAMBgNVHRMEBTADAQH/MA0GCSqGSIb3DQEB CwUAA4IBAQCJGJVMAmwZRK/mRqm9E0e3s4YGmYT2jwX5IX17XljEy+1cS4huuZW2 33CFpslkT1MN/r8IIZWilxT/lTujHyt4eERGjE1oRVKU8rlTH8WUjFzPIVu7nkte 09abqynAoec8aQukg79NRCY1l/E2/WzfnUt3yTgKPfZmzoiN0K+hH4gVlWtrizPA LaGwoslYYTA6jHNEeMm8OQLNf17OTmAa7EpeIgVpLRCieI9S3JIG4WYU8fVkeuiU cB439SdixU4cecVjNfFDpq6JM8N6+DQoYOSNRt9Dy0ioGyx5D4lWoIQ+BmXQENal gw+XLyejeNTNgLOxf9pbNYMJqxhkTkoE -----END CERTIFICATE-----

Using TCP in

connection_stringis the equivalent to using thebuildmaster_hostandportarguments.s = Worker(None, None, workername, passwd, basedir, keepalive connection_string='TCP:buildbot-master.com:9989')

is equivalent to

s = Worker('buildbot-master.com', 9989, workername, passwd, basedir, keepalive)

Caution

This page documents the latest, unreleased version of Buildbot. For documentation for released versions, see https://docs.buildbot.net/current/.

2.2.6. Next Steps

2.2.6.1. Launching the daemons

Both the buildmaster and the worker run as daemon programs. To launch them, pass the working directory to the buildbot and buildbot-worker commands, as appropriate:

# start a master

buildbot start [ BASEDIR ]

# start a worker

buildbot-worker start [ WORKER_BASEDIR ]

The BASEDIR is optional and can be omitted if the current directory contains the buildbot configuration (the buildbot.tac file).

buildbot start

This command will start the daemon and then return, so normally it will not produce any output.

To verify that the programs are indeed running, look for a pair of files named twistd.log

and twistd.pid that should be created in the working directory. twistd.pid contains

the process ID of the newly-spawned daemon.

When the worker connects to the buildmaster, new directories will start appearing in its base directory. The buildmaster tells the worker to create a directory for each Builder which will be using that worker. All build operations are performed within these directories: CVS checkouts, compiles, and tests.

Once you get everything running, you will want to arrange for the buildbot daemons to be started at

boot time. One way is to use cron, by putting them in a @reboot crontab entry [1]

@reboot buildbot start [ BASEDIR ]

When you run crontab to set this up, remember to do it as the buildmaster or worker account! If you add this to your crontab when running as your regular account (or worse yet, root), then the daemon will run as the wrong user, quite possibly as one with more authority than you intended to provide.

It is important to remember that the environment provided to cron jobs and init scripts can be

quite different than your normal runtime.

There may be fewer environment variables specified, and the PATH may be shorter than usual.

It is a good idea to test out this method of launching the worker by using a cron job with a time

in the near future, with the same command, and then check twistd.log to make sure the worker

actually started correctly.

Common problems here are for /usr/local or ~/bin to not be on your PATH,

or for PYTHONPATH to not be set correctly.

Sometimes HOME is messed up too. If using systemd to launch buildbot-worker,

it may be a good idea to specify a fixed PATH using the Environment directive

(see systemd unit file example).

Some distributions may include conveniences to make starting buildbot at boot time easy.

For instance, with the default buildbot package in Debian-based distributions, you may only need to

modify /etc/default/buildbot (see also /etc/init.d/buildbot, which reads the

configuration in /etc/default/buildbot).

Buildbot also comes with its own init scripts that provide support for controlling multi-worker and multi-master setups (mostly because they are based on the init script from the Debian package). With a little modification, these scripts can be used on both Debian and RHEL-based distributions. Thus, they may prove helpful to package maintainers who are working on buildbot (or to those who haven’t yet split buildbot into master and worker packages).

# install as /etc/default/buildbot-worker

# or /etc/sysconfig/buildbot-worker

worker/contrib/init-scripts/buildbot-worker.default

# install as /etc/default/buildmaster

# or /etc/sysconfig/buildmaster

master/contrib/init-scripts/buildmaster.default

# install as /etc/init.d/buildbot-worker

worker/contrib/init-scripts/buildbot-worker.init.sh

# install as /etc/init.d/buildmaster

master/contrib/init-scripts/buildmaster.init.sh

# ... and tell sysvinit about them

chkconfig buildmaster reset

# ... or

update-rc.d buildmaster defaults

2.2.6.2. Launching worker as Windows service

Security consideration

Setting up the buildbot worker as a Windows service requires Windows administrator rights. It is important to distinguish installation stage from service execution. It is strongly recommended run Buildbot worker with lowest required access rights. It is recommended run a service under machine local non-privileged account.

If you decide run Buildbot worker under domain account it is recommended to create dedicated strongly limited user account that will run Buildbot worker service.

Windows service setup

In this description, we assume that the buildbot worker account is the local domain account worker.

In case worker should run under domain user account please replace .\worker with <domain>\worker.

Please replace <worker.passwd> with given user password.

Please replace <worker.basedir> with the full/absolute directory

specification to the created worker (what is called BASEDIR in Creating a worker).

buildbot_worker_windows_service --user .\worker --password <worker.passwd> --startup auto install

powershell -command "& {&'New-Item' -path Registry::HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\services\BuildBot\Parameters}"

powershell -command "& {&'set-ItemProperty' -path Registry::HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\services\BuildBot\Parameters -Name directories -Value '<worker.basedir>'}"

The first command automatically adds user rights to run Buildbot as service.

Modify environment variables