This is the BuildBot manual for Buildbot version 0.8.2.

Copyright (C) 2005, 2006, 2009, 2010 Brian Warner

Copying and distribution of this file, with or without modification, are permitted in any medium without royalty provided the copyright notice and this notice are preserved.

The BuildBot is a system to automate the compile/test cycle required by most software projects to validate code changes. By automatically rebuilding and testing the tree each time something has changed, build problems are pinpointed quickly, before other developers are inconvenienced by the failure. The guilty developer can be identified and harassed without human intervention. By running the builds on a variety of platforms, developers who do not have the facilities to test their changes everywhere before checkin will at least know shortly afterwards whether they have broken the build or not. Warning counts, lint checks, image size, compile time, and other build parameters can be tracked over time, are more visible, and are therefore easier to improve.

The overall goal is to reduce tree breakage and provide a platform to run tests or code-quality checks that are too annoying or pedantic for any human to waste their time with. Developers get immediate (and potentially public) feedback about their changes, encouraging them to be more careful about testing before checkin.

Features:

The Buildbot was inspired by a similar project built for a development

team writing a cross-platform embedded system. The various components

of the project were supposed to compile and run on several flavors of

unix (linux, solaris, BSD), but individual developers had their own

preferences and tended to stick to a single platform. From time to

time, incompatibilities would sneak in (some unix platforms want to

use string.h, some prefer strings.h), and then the tree

would compile for some developers but not others. The buildbot was

written to automate the human process of walking into the office,

updating a tree, compiling (and discovering the breakage), finding the

developer at fault, and complaining to them about the problem they had

introduced. With multiple platforms it was difficult for developers to

do the right thing (compile their potential change on all platforms);

the buildbot offered a way to help.

Another problem was when programmers would change the behavior of a library without warning its users, or change internal aspects that other code was (unfortunately) depending upon. Adding unit tests to the codebase helps here: if an application's unit tests pass despite changes in the libraries it uses, you can have more confidence that the library changes haven't broken anything. Many developers complained that the unit tests were inconvenient or took too long to run: having the buildbot run them reduces the developer's workload to a minimum.

In general, having more visibility into the project is always good, and automation makes it easier for developers to do the right thing. When everyone can see the status of the project, developers are encouraged to keep the tree in good working order. Unit tests that aren't run on a regular basis tend to suffer from bitrot just like code does: exercising them on a regular basis helps to keep them functioning and useful.

The current version of the Buildbot is additionally targeted at distributed free-software projects, where resources and platforms are only available when provided by interested volunteers. The buildslaves are designed to require an absolute minimum of configuration, reducing the effort a potential volunteer needs to expend to be able to contribute a new test environment to the project. The goal is for anyone who wishes that a given project would run on their favorite platform should be able to offer that project a buildslave, running on that platform, where they can verify that their portability code works, and keeps working.

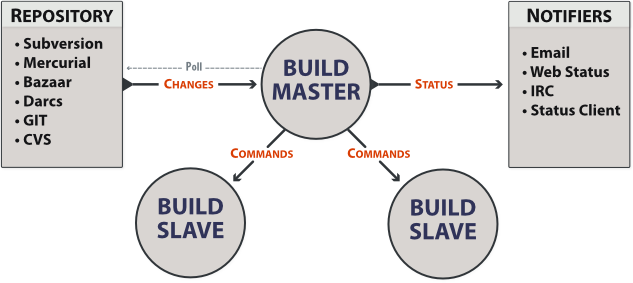

The Buildbot consists of a single buildmaster and one or more

buildslaves, connected in a star topology. The buildmaster

makes all decisions about what, when, and how to build. It sends

commands to be run on the build slaves, which simply execute the

commands and return the results. (certain steps involve more local

decision making, where the overhead of sending a lot of commands back

and forth would be inappropriate, but in general the buildmaster is

responsible for everything).

The buildmaster is usually fed Changes by some sort of version control

system (see Change Sources), which may cause builds to be run. As the

builds are performed, various status messages are produced, which are then sent

to any registered Status Targets (see Status Targets).

The buildmaster is configured and maintained by the “buildmaster admin”, who is generally the project team member responsible for build process issues. Each buildslave is maintained by a “buildslave admin”, who do not need to be quite as involved. Generally slaves are run by anyone who has an interest in seeing the project work well on their favorite platform.

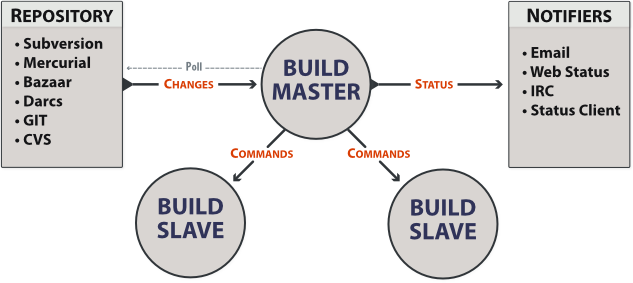

The buildslaves are typically run on a variety of separate machines, at least one per platform of interest. These machines connect to the buildmaster over a TCP connection to a publically-visible port. As a result, the buildslaves can live behind a NAT box or similar firewalls, as long as they can get to buildmaster. The TCP connections are initiated by the buildslave and accepted by the buildmaster, but commands and results travel both ways within this connection. The buildmaster is always in charge, so all commands travel exclusively from the buildmaster to the buildslave.

To perform builds, the buildslaves must typically obtain source code from a CVS/SVN/etc repository. Therefore they must also be able to reach the repository. The buildmaster provides instructions for performing builds, but does not provide the source code itself.

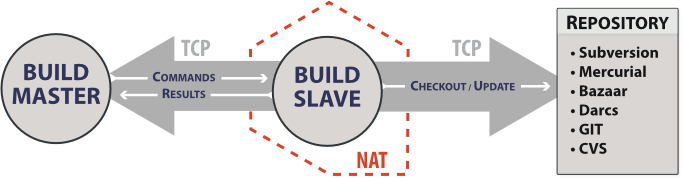

The Buildmaster consists of several pieces:

Each Builder is configured with a list of BuildSlaves that it will use for its builds. These buildslaves are expected to behave identically: the only reason to use multiple BuildSlaves for a single Builder is to provide a measure of load-balancing.

Within a single BuildSlave, each Builder creates its own SlaveBuilder instance. These SlaveBuilders operate independently from each other. Each gets its own base directory to work in. It is quite common to have many Builders sharing the same buildslave. For example, there might be two buildslaves: one for i386, and a second for PowerPC. There may then be a pair of Builders that do a full compile/test run, one for each architecture, and a lone Builder that creates snapshot source tarballs if the full builders complete successfully. The full builders would each run on a single buildslave, whereas the tarball creation step might run on either buildslave (since the platform doesn't matter when creating source tarballs). In this case, the mapping would look like:

Builder(full-i386) -> BuildSlaves(slave-i386)

Builder(full-ppc) -> BuildSlaves(slave-ppc)

Builder(source-tarball) -> BuildSlaves(slave-i386, slave-ppc)

and each BuildSlave would have two SlaveBuilders inside it, one for a full builder, and a second for the source-tarball builder.

Once a SlaveBuilder is available, the Builder pulls one or more BuildRequests off its incoming queue. (It may pull more than one if it determines that it can merge the requests together; for example, there may be multiple requests to build the current HEAD revision). These requests are merged into a single Build instance, which includes the SourceStamp that describes what exact version of the source code should be used for the build. The Build is then randomly assigned to a free SlaveBuilder and the build begins.

The behaviour when BuildRequests are merged can be customized, see Merging BuildRequests.

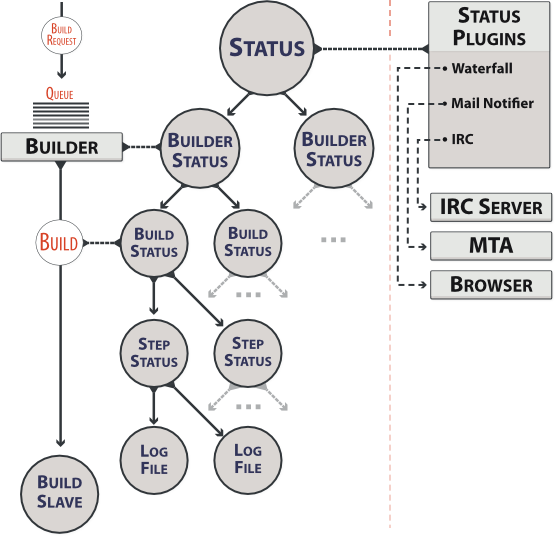

The buildmaster maintains a central Status object, to which various status plugins are connected. Through this Status object, a full hierarchy of build status objects can be obtained.

The configuration file controls which status plugins are active. Each status plugin gets a reference to the top-level Status object. From there they can request information on each Builder, Build, Step, and LogFile. This query-on-demand interface is used by the html.Waterfall plugin to create the main status page each time a web browser hits the main URL.

The status plugins can also subscribe to hear about new Builds as they occur: this is used by the MailNotifier to create new email messages for each recently-completed Build.

The Status object records the status of old builds on disk in the buildmaster's base directory. This allows it to return information about historical builds.

There are also status objects that correspond to Schedulers and BuildSlaves. These allow status plugins to report information about upcoming builds, and the online/offline status of each buildslave.

A day in the life of the buildbot:

Buildbot is shipped in two components: the buildmaster (called buildbot

for legacy reasons) and the buildslave. The buildslave component has far fewer

requirements, and is more broadly compatible than the buildmaster. You will

need to carefully pick the environment in which to run your buildmaster, but

the buildslave should be able to run just about anywhere.

It is possible to install the buildmaster and buildslave on the same system, although for anything but the smallest installation this arrangement will not be very efficient.

At a bare minimum, you'll need the following for both the buildmaster and a buildslave:

Buildbot requires python-2.4 or later.

Both the buildmaster and the buildslaves require Twisted-8.0.x or later. As always, the most recent version is recommended.

Twisted is delivered as a collection of subpackages. You'll need at least "Twisted" (the core package), and you'll also want TwistedMail, TwistedWeb, and TwistedWords (for sending email, serving a web status page, and delivering build status via IRC, respectively). You might also want TwistedConch (for the encrypted Manhole debug port). Note that Twisted requires ZopeInterface to be installed as well.

Of course, your project's build process will impose additional requirements on the buildslaves. These hosts must have all the tools necessary to compile and test your project's source code.

Buildbot - both master and slave - runs well natively on Windows. The slave runs well on Cygwin, but because of problems with SQLite on Cygwin, the master does not.

Buildbot's windows testing is limited to the most recent Twisted and Python versions. For best results, use the most recent available versions of these libraries on Windows.

The sqlite3 package is required for python-2.5 and earlier (it is already included in python-2.5 and later, but the version in python-2.5 has nasty bugs)

The simplejson package is required for python-2.5 and earlier (it is already included as json in python-2.6 and later)

Buildbot requires Jinja version 2.1 or higher

Jinja2 is a general purpose templating language and is used by Buildbot to generate the HTML output.

Buildbot and Buildslave are installed using the standard python

distutils process. For either component, after unpacking the tarball,

the process is:

python setup.py build

python setup.py install

where the install step may need to be done as root. This will put the bulk of

the code in somewhere like /usr/lib/python2.3/site-packages/buildbot. It

will also install the buildbot command-line tool in

/usr/bin/buildbot.

If the environment variable $NO_INSTALL_REQS is set to '1', then

setup.py will not try to install Buildbot's requirements. This is

usually only useful when building a Buildbot package.

To test this, shift to a different directory (like /tmp), and run:

buildbot --version

# or

buildslave --version

If it shows you the versions of Buildbot and Twisted, the install went

ok. If it says no such command or it gets an ImportError

when it tries to load the libaries, then something went wrong.

pydoc buildbot is another useful diagnostic tool.

Windows users will find these files in other places. You will need to

make sure that python can find the libraries, and will probably find

it convenient to have buildbot on your PATH.

If you wish, you can run the buildbot unit test suite like this:

PYTHONPATH=. trial buildbot.test

# or

PYTHONPATH=. trial buildslave.test

Nothing should fail, a few might be skipped. If any of the tests fail, you should stop and investigate the cause before continuing the installation process, as it will probably be easier to track down the bug early.

If you cannot or do not wish to install the buildbot into a site-wide location like /usr or /usr/local, you can also install it into the account's home directory or any other location using a tool like virtualenv.

As you learned earlier (see System Architecture), the buildmaster

runs on a central host (usually one that is publically visible, so

everybody can check on the status of the project), and controls all

aspects of the buildbot system. Let us call this host

buildbot.example.org.

You may wish to create a separate user account for the buildmaster,

perhaps named buildmaster. This can help keep your personal

configuration distinct from that of the buildmaster and is useful if

you have to use a mail-based notification system (see Change Sources). However, the Buildbot will work just fine with your regular

user account.

You need to choose a directory for the buildmaster, called the

basedir. This directory will be owned by the buildmaster, which

will use configuration files therein, and create status files as it

runs. ~/Buildbot is a likely value. If you run multiple

buildmasters in the same account, or if you run both masters and

slaves, you may want a more distinctive name like

~/Buildbot/master/gnomovision or

~/Buildmasters/fooproject. If you are using a separate user

account, this might just be ~buildmaster/masters/fooproject.

Once you've picked a directory, use the buildbot create-master command to create the directory and populate it with startup files:

buildbot create-master -r basedir

You will need to create a configuration file (see Configuration) before starting the buildmaster. Most of the rest of this manual is dedicated to explaining how to do this. A sample configuration file is placed in the working directory, named master.cfg.sample, which can be copied to master.cfg and edited to suit your purposes.

(Internal details: This command creates a file named

buildbot.tac that contains all the state necessary to create

the buildmaster. Twisted has a tool called twistd which can use

this .tac file to create and launch a buildmaster instance. twistd

takes care of logging and daemonization (running the program in the

background). /usr/bin/buildbot is a front end which runs twistd

for you.)

In addition to buildbot.tac, a small Makefile.sample is installed. This can be used as the basis for customized daemon startup, See Launching the daemons.

If you want to use MySQL as the database backend for your Buildbot, add the

--db option to the create-master invocation to specify the

connection string for the MySQL database (see Database Specification).

This section lists options to the create-master command. You can also type buildslave create-slave --help for an up-to-the-moment summary.

--force--no-logrotate--relocatable--config--log-size--log-countNone to keep all twistd.log files

around. The default is 10.

--dbIf you have just installed a new version of the Buildbot code, and you have buildmasters that were created using an older version, you'll need to upgrade these buildmasters before you can use them. The upgrade process adds and modifies files in the buildmaster's base directory to make it compatible with the new code.

buildbot upgrade-master basedir

This command will also scan your master.cfg file for incompatibilities (by loading it and printing any errors or deprecation warnings that occur). Each buildbot release tries to be compatible with configurations that worked cleanly (i.e. without deprecation warnings) on the previous release: any functions or classes that are to be removed will first be deprecated in a release, to give you a chance to start using the replacement.

The upgrade-master command is idempotent. It is safe to run it

multiple times. After each upgrade of the buildbot code, you should

use upgrade-master on all your buildmasters.

In general, Buildbot slaves and masters can be upgraded independently, although some new features will not be available, depending on the master and slave versions.

The 0.7.6 release introduced the public_html/ directory, which

contains index.html and other files served by the

WebStatus and Waterfall status displays. The

upgrade-master command will create these files if they do not

already exist. It will not modify existing copies, but it will write a

new copy in e.g. index.html.new if the new version differs from

the version that already exists.

Buildbot-0.8.0 introduces a database backend, which is SQLite by default. The

upgrade-master command will automatically create and populate this

database with the changes the buildmaster has seen. Note that, as of this

release, build history is not contained in the database, and is thus not

migrated.

The upgrade process renames the Changes pickle ($basedir/changes.pck) to

changes.pck.old once the upgrade is complete. To reverse the upgrade,

simply downgrade Buildbot and move this file back to its original name. You

may also wish to delete the state database (state.sqlite).

The upgrade process assumes that strings in your Changes pickle are encoded in

UTF-8 (or plain ASCII). If this is not the case, and if there are non-UTF-8

characters in the pickle, the upgrade will fail with a suitable error message.

If this occurs, you have two options. If the change history is not important

to your purpose, you can simply delete changes.pck.

If you would like to keep the change history, then you will need to figure out

which encoding is in use, and use contrib/fix_changes_pickle_encoding.py

(see Contrib Scripts) to rewrite the changes pickle into Unicode before

upgrading the master. A typical invocation (with Mac-Roman encoding) might

look like:

$ python $buildbot/contrib/fix_changes_pickle_encoding.py changes.pck macroman

decoding bytestrings in changes.pck using macroman

converted 11392 strings

backing up changes.pck to changes.pck.old

If your Changes pickle uses multiple encodings, you're on your own, but the script in contrib may provide a good starting point for the fix.

Typically, you will be adding a buildslave to an existing buildmaster, to provide additional architecture coverage. The buildbot administrator will give you several pieces of information necessary to connect to the buildmaster. You should also be somewhat familiar with the project being tested, so you can troubleshoot build problems locally.

The buildbot exists to make sure that the project's stated “how to build it” process actually works. To this end, the buildslave should run in an environment just like that of your regular developers. Typically the project build process is documented somewhere (README, INSTALL, etc), in a document that should mention all library dependencies and contain a basic set of build instructions. This document will be useful as you configure the host and account in which the buildslave runs.

Here's a good checklist for setting up a buildslave:

It is recommended (although not mandatory) to set up a separate user

account for the buildslave. This account is frequently named

buildbot or buildslave. This serves to isolate your

personal working environment from that of the slave's, and helps to

minimize the security threat posed by letting possibly-unknown

contributors run arbitrary code on your system. The account should

have a minimum of fancy init scripts.

Follow the instructions given earlier (see Installing the code).

If you use a separate buildslave account, and you didn't install the

buildbot code to a shared location, then you will need to install it

with --home=~ for each account that needs it.

Make sure the host can actually reach the buildmaster. Usually the buildmaster is running a status webserver on the same machine, so simply point your web browser at it and see if you can get there. Install whatever additional packages or libraries the project's INSTALL document advises. (or not: if your buildslave is supposed to make sure that building without optional libraries still works, then don't install those libraries).

Again, these libraries don't necessarily have to be installed to a site-wide shared location, but they must be available to your build process. Accomplishing this is usually very specific to the build process, so installing them to /usr or /usr/local is usually the best approach.

Follow the instructions in the INSTALL document, in the buildslave's account. Perform a full CVS (or whatever) checkout, configure, make, run tests, etc. Confirm that the build works without manual fussing. If it doesn't work when you do it by hand, it will be unlikely to work when the buildbot attempts to do it in an automated fashion.

This should be somewhere in the buildslave's account, typically named after the project which is being tested. The buildslave will not touch any file outside of this directory. Something like ~/Buildbot or ~/Buildslaves/fooproject is appropriate.

When the buildbot admin configures the buildmaster to accept and use your buildslave, they will provide you with the following pieces of information:

Now run the 'buildslave' command as follows:

buildslave create-slave BASEDIR MASTERHOST:PORT SLAVENAME PASSWORD

This will create the base directory and a collection of files inside,

including the buildbot.tac file that contains all the

information you passed to the buildbot command.

When it first connects, the buildslave will send a few files up to the buildmaster which describe the host that it is running on. These files are presented on the web status display so that developers have more information to reproduce any test failures that are witnessed by the buildbot. There are sample files in the info subdirectory of the buildbot's base directory. You should edit these to correctly describe you and your host.

BASEDIR/info/admin should contain your name and email address. This is the “buildslave admin address”, and will be visible from the build status page (so you may wish to munge it a bit if address-harvesting spambots are a concern).

BASEDIR/info/host should be filled with a brief description of the host: OS, version, memory size, CPU speed, versions of relevant libraries installed, and finally the version of the buildbot code which is running the buildslave.

The optional BASEDIR/info/access_uri can specify a URI which will

connect a user to the machine. Many systems accept ssh://hostname URIs

for this purpose.

If you run many buildslaves, you may want to create a single ~buildslave/info file and share it among all the buildslaves with symlinks.

There are a handful of options you might want to use when creating the buildslave with the buildslave create-slave <options> DIR <params> command. You can type buildslave create-slave --help for a summary. To use these, just include them on the buildslave create-slave command line, like this:

buildslave create-slave --umask=022 ~/buildslave buildmaster.example.org:42012 myslavename mypasswd

--no-logrotate--usepty--umask--umask=022 to tell

the buildslave to fix the umask after twistd clobbers it. If you want

build products to be writable by other accounts too, use

--umask=000, but this is likely to be a security problem.

--keepalive--keepalive=120.

If the buildslave is behind a NAT box or stateful firewall, these

messages may help to keep the connection alive: some NAT boxes tend to

forget about a connection if it has not been used in a while. When

this happens, the buildmaster will think that the buildslave has

disappeared, and builds will time out. Meanwhile the buildslave will

not realize than anything is wrong.

--maxdelay--log-size--log-countNone to keep all twistd.log files

around. The default is 10.

unicode_encodingThe default value is what python's sys.getfilesystemencoding() returns, which on Windows is 'mbcs', on Mac OSX is 'utf-8', and on Unix depends on your locale settings.

If you need a different encoding, this can be changed in your build slave's buildbot.tac file by adding a unicode_encoding argument to BuildSlave:

s = BuildSlave(buildmaster_host, port, slavename, passwd, basedir,

keepalive, usepty, umask=umask, maxdelay=maxdelay,

unicode_encoding='utf-8')

If you have just installed a new version of Buildbot-slave, you may need to take some steps to upgrade it. If you are upgrading to version 0.8.2 or later, you can run

buildslave upgrade-slave /path/to/buildslave/dir

Before Buildbot version 0.8.1, the Buildbot master and slave were part of the same distribution. As of version 0.8.1, the buildslave is a separate distribution.

As of this release, you will need to install buildbot-slave to run a slave.

Any automatic startup scripts that had run buildbot start for previous versions

should be changed to run buildslave start instead.

If you are running a version later than 0.8.1, then you can skip the remainder

of this section: the upgrade-slave command will take care of this. If

you are upgrading directly to 0.8.1, read on.

The existing buildbot.tac for any buildslaves running older versions

will need to be edited or replaced. If the loss of cached buildslave state

(e.g., for Source steps in copy mode) is not problematic, the easiest solution

is to simply delete the slave directory and re-run buildslave

create-slave.

If deleting the slave directory is problematic, the change to

buildbot.tac is simple. On line 3, replace

from buildbot.slave.bot import BuildSlave

with

from buildslave.bot import BuildSlave

After this change, the buildslave should start as usual.

Both the buildmaster and the buildslave run as daemon programs. To

launch them, pass the working directory to the buildbot

and buildslave commands, as appropriate:

# start a master

buildbot start BASEDIR

# start a slave

buildslave start SLAVE_BASEDIR

The BASEDIR is option and can be omitted if the current directory contains the buildbot configuration (the buildbot.tac file).

buildbot start

This command will start the daemon and then return, so normally it will not produce any output. To verify that the programs are indeed running, look for a pair of files named twistd.log and twistd.pid that should be created in the working directory. twistd.pid contains the process ID of the newly-spawned daemon.

When the buildslave connects to the buildmaster, new directories will start appearing in its base directory. The buildmaster tells the slave to create a directory for each Builder which will be using that slave. All build operations are performed within these directories: CVS checkouts, compiles, and tests.

Once you get everything running, you will want to arrange for the

buildbot daemons to be started at boot time. One way is to use

cron, by putting them in a @reboot crontab entry1:

@reboot buildbot start BASEDIR

When you run crontab to set this up, remember to do it as the buildmaster or buildslave account! If you add this to your crontab when running as your regular account (or worse yet, root), then the daemon will run as the wrong user, quite possibly as one with more authority than you intended to provide.

It is important to remember that the environment provided to cron jobs

and init scripts can be quite different that your normal runtime.

There may be fewer environment variables specified, and the PATH may

be shorter than usual. It is a good idea to test out this method of

launching the buildslave by using a cron job with a time in the near

future, with the same command, and then check twistd.log to

make sure the slave actually started correctly. Common problems here

are for /usr/local or ~/bin to not be on your

PATH, or for PYTHONPATH to not be set correctly.

Sometimes HOME is messed up too.

Some distributions may include conveniences to make starting buildbot

at boot time easy. For instance, with the default buildbot package in

Debian-based distributions, you may only need to modify

/etc/default/buildbot (see also /etc/init.d/buildbot, which

reads the configuration in /etc/default/buildbot).

Buildbot also comes with its own init scripts that provide support for controlling multi-slave and multi-master setups (mostly because they are based on the init script from the debian package). With a little modification these scripts can be used both on debian and rhel based distributions and may thus prove helpful to package maintainers who are working on buildbot (or those that haven't yet split buildbot into master and slave packages).

# install as /etc/default|sysconfig/buildslave

master/contrib/init-scripts/buildslave.default

# install /etc/default|sysconfig/buildslave

master/contrib/init-scripts/buildmaster.default

# install as /etc/init.d/buildslave

slave/contrib/init-scripts/buildslave.init.sh

# install as /etc/init.d/buildmaster

slave/contrib/init-scripts/buildmaster.init.sh

# ... and tell sysvinit about them

chkconfig buildmaster reset

# ... or

update-rc.d buildmaster defaults

While a buildbot daemon runs, it emits text to a logfile, named

twistd.log. A command like tail -f twistd.log is useful

to watch the command output as it runs.

The buildmaster will announce any errors with its configuration file in the logfile, so it is a good idea to look at the log at startup time to check for any problems. Most buildmaster activities will cause lines to be added to the log.

To stop a buildmaster or buildslave manually, use:

buildbot stop BASEDIR

# or

buildslave stop SLAVE_BASEDIR

This simply looks for the twistd.pid file and kills whatever process is identified within.

At system shutdown, all processes are sent a SIGKILL. The

buildmaster and buildslave will respond to this by shutting down

normally.

The buildmaster will respond to a SIGHUP by re-reading its

config file. Of course, this only works on unix-like systems with

signal support, and won't work on Windows. The following shortcut is

available:

buildbot reconfig BASEDIR

When you update the Buildbot code to a new release, you will need to

restart the buildmaster and/or buildslave before it can take advantage

of the new code. You can do a buildbot stop BASEDIR and

buildbot start BASEDIR in quick succession, or you can

use the restart shortcut, which does both steps for you:

buildbot restart BASEDIR

Buildslaves can similarly be restarted with:

buildslave restart BASEDIR

There are certain configuration changes that are not handled cleanly

by buildbot reconfig. If this occurs, buildbot restart

is a more robust tool to fully switch over to the new configuration.

buildbot restart may also be used to start a stopped Buildbot

instance. This behaviour is useful when writing scripts that stop, start

and restart Buildbot.

A buildslave may also be gracefully shutdown from the see WebStatus status plugin. This is useful to shutdown a buildslave without interrupting any current builds. The buildmaster will wait until the buildslave is finished all its current builds, and will then tell the buildslave to shutdown.

The buildmaster can be configured to send out email notifications when a slave has been offline for a while. Be sure to configure the buildmaster with a contact email address for each slave so these notifications are sent to someone who can bring it back online.

If you find you can no longer provide a buildslave to the project, please let the project admins know, so they can put out a call for a replacement.

The Buildbot records status and logs output continually, each time a

build is performed. The status tends to be small, but the build logs

can become quite large. Each build and log are recorded in a separate

file, arranged hierarchically under the buildmaster's base directory.

To prevent these files from growing without bound, you should

periodically delete old build logs. A simple cron job to delete

anything older than, say, two weeks should do the job. The only trick

is to leave the buildbot.tac and other support files alone, for

which find's -mindepth argument helps skip everything in the

top directory. You can use something like the following:

@weekly cd BASEDIR && find . -mindepth 2 i-path './public_html/*' -prune -o -type f -mtime +14 -exec rm {} \;

@weekly cd BASEDIR && find twistd.log* -mtime +14 -exec rm {} \;

Alternatively, you can configure a maximum number of old logs to be kept

using the --log-count command line option when running buildbot

create-slave or buildbot create-master.

Here are a few hints on diagnosing common problems.

Cron jobs are typically run with a minimal shell (/bin/sh, not

/bin/bash), and tilde expansion is not always performed in such

commands. You may want to use explicit paths, because the PATH

is usually quite short and doesn't include anything set by your

shell's startup scripts (.profile, .bashrc, etc). If

you've installed buildbot (or other python libraries) to an unusual

location, you may need to add a PYTHONPATH specification (note

that python will do tilde-expansion on PYTHONPATH elements by

itself). Sometimes it is safer to fully-specify everything:

@reboot PYTHONPATH=~/lib/python /usr/local/bin/buildbot start /usr/home/buildbot/basedir

Take the time to get the @reboot job set up. Otherwise, things will work fine for a while, but the first power outage or system reboot you have will stop the buildslave with nothing but the cries of sorrowful developers to remind you that it has gone away.

If the buildslave cannot connect to the buildmaster, the reason should be described in the twistd.log logfile. Some common problems are an incorrect master hostname or port number, or a mistyped bot name or password. If the buildslave loses the connection to the master, it is supposed to attempt to reconnect with an exponentially-increasing backoff. Each attempt (and the time of the next attempt) will be logged. If you get impatient, just manually stop and re-start the buildslave.

When the buildmaster is restarted, all slaves will be disconnected, and will

attempt to reconnect as usual. The reconnect time will depend upon how long the

buildmaster is offline (i.e. how far up the exponential backoff curve the

slaves have travelled). Again, buildslave restart BASEDIR will

speed up the process.

While some features of Buildbot are included in the distribution, others are

only available in contrib/ in the source directory. The latest versions

of such scripts are available at

http://github.com/buildbot/buildbot/tree/master/master/contrib.

This chapter defines some of the basic concepts that the Buildbot uses. You'll need to understand how the Buildbot sees the world to configure it properly.

These source trees come from a Version Control System of some kind.

CVS and Subversion are two popular ones, but the Buildbot supports

others. All VC systems have some notion of an upstream

repository which acts as a server2, from which clients

can obtain source trees according to various parameters. The VC

repository provides source trees of various projects, for different

branches, and from various points in time. The first thing we have to

do is to specify which source tree we want to get.

For the purposes of the Buildbot, we will try to generalize all VC systems as having repositories that each provide sources for a variety of projects. Each project is defined as a directory tree with source files. The individual files may each have revisions, but we ignore that and treat the project as a whole as having a set of revisions (CVS is really the only VC system still in widespread use that has per-file revisions.. everything modern has moved to atomic tree-wide changesets). Each time someone commits a change to the project, a new revision becomes available. These revisions can be described by a tuple with two items: the first is a branch tag, and the second is some kind of revision stamp or timestamp. Complex projects may have multiple branch tags, but there is always a default branch. The timestamp may be an actual timestamp (such as the -D option to CVS), or it may be a monotonically-increasing transaction number (such as the change number used by SVN and P4, or the revision number used by Bazaar, or a labeled tag used in CVS)3. The SHA1 revision ID used by Mercurial and Git is also a kind of revision stamp, in that it specifies a unique copy of the source tree, as does a Darcs “context” file.

When we aren't intending to make any changes to the sources we check out (at least not any that need to be committed back upstream), there are two basic ways to use a VC system:

Build personnel or CM staff typically use the first approach: the build that results is (ideally) completely specified by the two parameters given to the VC system: repository and revision tag. This gives QA and end-users something concrete to point at when reporting bugs. Release engineers are also reportedly fond of shipping code that can be traced back to a concise revision tag of some sort.

Developers are more likely to use the second approach: each morning the developer does an update to pull in the changes committed by the team over the last day. These builds are not easy to fully specify: it depends upon exactly when you did a checkout, and upon what local changes the developer has in their tree. Developers do not normally tag each build they produce, because there is usually significant overhead involved in creating these tags. Recreating the trees used by one of these builds can be a challenge. Some VC systems may provide implicit tags (like a revision number), while others may allow the use of timestamps to mean “the state of the tree at time X” as opposed to a tree-state that has been explicitly marked.

The Buildbot is designed to help developers, so it usually works in terms of the latest sources as opposed to specific tagged revisions. However, it would really prefer to build from reproducible source trees, so implicit revisions are used whenever possible.

So for the Buildbot's purposes we treat each VC system as a server which can take a list of specifications as input and produce a source tree as output. Some of these specifications are static: they are attributes of the builder and do not change over time. Others are more variable: each build will have a different value. The repository is changed over time by a sequence of Changes, each of which represents a single developer making changes to some set of files. These Changes are cumulative.

For normal builds, the Buildbot wants to get well-defined source trees that contain specific Changes, and exclude other Changes that may have occurred after the desired ones. We assume that the Changes arrive at the buildbot (through one of the mechanisms described in see Change Sources) in the same order in which they are committed to the repository. The Buildbot waits for the tree to become “stable” before initiating a build, for two reasons. The first is that developers frequently make multiple related commits in quick succession, even when the VC system provides ways to make atomic transactions involving multiple files at the same time. Running a build in the middle of these sets of changes would use an inconsistent set of source files, and is likely to fail (and is certain to be less useful than a build which uses the full set of changes). The tree-stable-timer is intended to avoid these useless builds that include some of the developer's changes but not all. The second reason is that some VC systems (i.e. CVS) do not provide repository-wide transaction numbers, so that timestamps are the only way to refer to a specific repository state. These timestamps may be somewhat ambiguous, due to processing and notification delays. By waiting until the tree has been stable for, say, 10 minutes, we can choose a timestamp from the middle of that period to use for our source checkout, and then be reasonably sure that any clock-skew errors will not cause the build to be performed on an inconsistent set of source files.

The Schedulers always use the tree-stable-timer, with a timeout that is configured to reflect a reasonable tradeoff between build latency and change frequency. When the VC system provides coherent repository-wide revision markers (such as Subversion's revision numbers, or in fact anything other than CVS's timestamps), the resulting Build is simply performed against a source tree defined by that revision marker. When the VC system does not provide this, a timestamp from the middle of the tree-stable period is used to generate the source tree4.

For CVS, the static specifications are repository and

module. In addition to those, each build uses a timestamp (or

omits the timestamp to mean the latest) and branch tag

(which defaults to HEAD). These parameters collectively specify a set

of sources from which a build may be performed.

Subversion combines the

repository, module, and branch into a single Subversion URL

parameter. Within that scope, source checkouts can be specified by a

numeric revision number (a repository-wide

monotonically-increasing marker, such that each transaction that

changes the repository is indexed by a different revision number), or

a revision timestamp. When branches are used, the repository and

module form a static baseURL, while each build has a

revision number and a branch (which defaults to a

statically-specified defaultBranch). The baseURL and

branch are simply concatenated together to derive the

svnurl to use for the checkout.

Perforce is similar. The server

is specified through a P4PORT parameter. Module and branch

are specified in a single depot path, and revisions are

depot-wide. When branches are used, the p4base and

defaultBranch are concatenated together to produce the depot

path.

Bzr (which is a descendant of Arch/Bazaar, and is frequently referred to as “Bazaar”) has the same sort of repository-vs-workspace model as Arch, but the repository data can either be stored inside the working directory or kept elsewhere (either on the same machine or on an entirely different machine). For the purposes of Buildbot (which never commits changes), the repository is specified with a URL and a revision number.

The most common way to obtain read-only access to a bzr tree is via

HTTP, simply by making the repository visible through a web server

like Apache. Bzr can also use FTP and SFTP servers, if the buildslave

process has sufficient privileges to access them. Higher performance

can be obtained by running a special Bazaar-specific server. None of

these matter to the buildbot: the repository URL just has to match the

kind of server being used. The repoURL argument provides the

location of the repository.

Branches are expressed as subdirectories of the main central

repository, which means that if branches are being used, the BZR step

is given a baseURL and defaultBranch instead of getting

the repoURL argument.

Darcs doesn't really have the

notion of a single master repository. Nor does it really have

branches. In Darcs, each working directory is also a repository, and

there are operations to push and pull patches from one of these

repositories to another. For the Buildbot's purposes, all you

need to do is specify the URL of a repository that you want to build

from. The build slave will then pull the latest patches from that

repository and build them. Multiple branches are implemented by using

multiple repositories (possibly living on the same server).

Builders which use Darcs therefore have a static repourl which

specifies the location of the repository. If branches are being used,

the source Step is instead configured with a baseURL and a

defaultBranch, and the two strings are simply concatenated

together to obtain the repository's URL. Each build then has a

specific branch which replaces defaultBranch, or just uses the

default one. Instead of a revision number, each build can have a

“context”, which is a string that records all the patches that are

present in a given tree (this is the output of darcs changes

--context, and is considerably less concise than, e.g. Subversion's

revision number, but the patch-reordering flexibility of Darcs makes

it impossible to provide a shorter useful specification).

Mercurial is like Darcs, in that

each branch is stored in a separate repository. The repourl,

baseURL, and defaultBranch arguments are all handled the

same way as with Darcs. The “revision”, however, is the hash

identifier returned by hg identify.

Git also follows a decentralized model, and

each repository can have several branches and tags. The source Step is

configured with a static repourl which specifies the location

of the repository. In addition, an optional branch parameter

can be specified to check out code from a specific branch instead of

the default “master” branch. The “revision” is specified as a SHA1

hash as returned by e.g. git rev-parse. No attempt is made

to ensure that the specified revision is actually a subset of the

specified branch.

Each Change has a who attribute, which specifies which developer is

responsible for the change. This is a string which comes from a namespace

controlled by the VC repository. Frequently this means it is a username on the

host which runs the repository, but not all VC systems require this. Each

StatusNotifier will map the who attribute into something appropriate for

their particular means of communication: an email address, an IRC handle, etc.

It also has a list of files, which are just the tree-relative

filenames of any files that were added, deleted, or modified for this

Change. These filenames are used by the fileIsImportant

function (in the Scheduler) to decide whether it is worth triggering a

new build or not, e.g. the function could use the following function

to only run a build if a C file were checked in:

def has_C_files(change):

for name in change.files:

if name.endswith(".c"):

return True

return False

Certain BuildSteps can also use the list of changed files

to run a more targeted series of tests, e.g. the

python_twisted.Trial step can run just the unit tests that

provide coverage for the modified .py files instead of running the

full test suite.

The Change also has a comments attribute, which is a string

containing any checkin comments.

A change's project, by default the empty string, describes the source code that changed. It is a free-form string which the buildbot administrator can use to flexibly discriminate among changes.

Generally, a project is an independently-buildable unit of source. This field can be used to apply different build steps to different projects. For example, an open-source application might build its Windows client from a separate codebase than its POSIX server. In this case, the change sources should be configured to attach an appropriate project string (say, "win-client" and "server") to changes from each codebase. Schedulers would then examine these strings and trigger the appropriate builders for each project.

A change occurs within the context of a specific repository. This is generally specified with a string, and for most version-control systems, this string takes the form of a URL.

Changes can be filtered on repository, but more often this field is used as a hint for the build steps to figure out which code to check out.

Each Change can have a revision attribute, which describes how

to get a tree with a specific state: a tree which includes this Change

(and all that came before it) but none that come after it. If this

information is unavailable, the .revision attribute will be

None. These revisions are provided by the ChangeSource, and

consumed by the computeSourceRevision method in the appropriate

source.Source class.

revision is an int, seconds since the epoch

revision is an int, the changeset number (r%d)

revision is a large string, the output of darcs changes --context

revision is a short string (a hash ID), the output of hg identify

revision is an int, the transaction number

revision is a short string (a SHA1 hash), the output of e.g.

git rev-parse

The Change might also have a branch attribute. This indicates

that all of the Change's files are in the same named branch. The

Schedulers get to decide whether the branch should be built or not.

For VC systems like CVS, and Git, the branch

name is unrelated to the filename. (that is, the branch name and the

filename inhabit unrelated namespaces). For SVN, branches are

expressed as subdirectories of the repository, so the file's

“svnurl” is a combination of some base URL, the branch name, and the

filename within the branch. (In a sense, the branch name and the

filename inhabit the same namespace). Darcs branches are

subdirectories of a base URL just like SVN. Mercurial branches are the

same as Darcs.

A Change may have one or more properties attached to it, usually specified through the Force Build form or see sendchange. Properties are discussed in detail in the see Build Properties section.

Finally, the Change might have a links list, which is intended

to provide a list of URLs to a viewcvs-style web page that

provides more detail for this Change, perhaps including the full file

diffs.

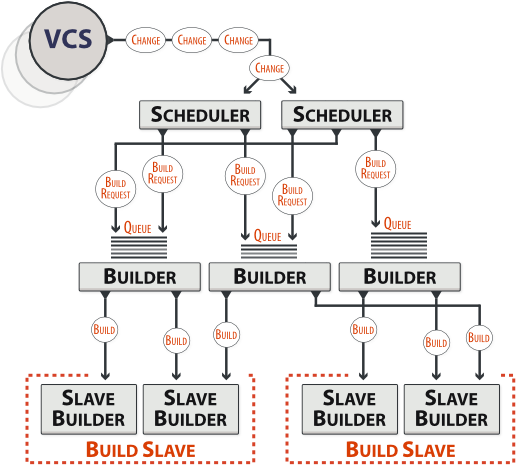

Each Buildmaster has a set of Scheduler objects, each of which

gets a copy of every incoming Change. The Schedulers are responsible

for deciding when Builds should be run. Some Buildbot installations

might have a single Scheduler, while others may have several, each for

a different purpose.

For example, a “quick” scheduler might exist to give immediate

feedback to developers, hoping to catch obvious problems in the code

that can be detected quickly. These typically do not run the full test

suite, nor do they run on a wide variety of platforms. They also

usually do a VC update rather than performing a brand-new checkout

each time. You could have a “quick” scheduler which used a 30 second

timeout, and feeds a single “quick” Builder that uses a VC

mode='update' setting.

A separate “full” scheduler would run more comprehensive tests a

little while later, to catch more subtle problems. This scheduler

would have a longer tree-stable-timer, maybe 30 minutes, and would

feed multiple Builders (with a mode= of 'copy',

'clobber', or 'export').

The tree-stable-timer and fileIsImportant decisions are

made by the Scheduler. Dependencies are also implemented here.

Periodic builds (those which are run every N seconds rather than after

new Changes arrive) are triggered by a special Periodic

Scheduler subclass. The default Scheduler class can also be told to

watch for specific branches, ignoring Changes on other branches. This

may be useful if you have a trunk and a few release branches which

should be tracked, but when you don't want to have the Buildbot pay

attention to several dozen private user branches.

When the setup has multiple sources of Changes the category

can be used for Scheduler objects to filter out a subset

of the Changes. Note that not all change sources can attach a category.

Some Schedulers may trigger builds for other reasons, other than recent Changes. For example, a Scheduler subclass could connect to a remote buildmaster and watch for builds of a library to succeed before triggering a local build that uses that library.

Each Scheduler creates and submits BuildSet objects to the

BuildMaster, which is then responsible for making sure the

individual BuildRequests are delivered to the target

Builders.

Scheduler instances are activated by placing them in the

c['schedulers'] list in the buildmaster config file. Each

Scheduler has a unique name.

A BuildSet is the name given to a set of Builds that all

compile/test the same version of the tree on multiple Builders. In

general, all these component Builds will perform the same sequence of

Steps, using the same source code, but on different platforms or

against a different set of libraries.

The BuildSet is tracked as a single unit, which fails if any of

the component Builds have failed, and therefore can succeed only if

all of the component Builds have succeeded. There are two kinds

of status notification messages that can be emitted for a BuildSet:

the firstFailure type (which fires as soon as we know the

BuildSet will fail), and the Finished type (which fires once

the BuildSet has completely finished, regardless of whether the

overall set passed or failed).

A BuildSet is created with a source stamp tuple of

(branch, revision, changes, patch), some of which may be None, and a

list of Builders on which it is to be run. They are then given to the

BuildMaster, which is responsible for creating a separate

BuildRequest for each Builder.

There are a couple of different likely values for the

SourceStamp:

(revision=None, changes=[CHANGES], patch=None)SourceStamp used when a series of Changes have

triggered a build. The VC step will attempt to check out a tree that

contains CHANGES (and any changes that occurred before CHANGES, but

not any that occurred after them).

(revision=None, changes=None, patch=None)SourceStamp that would be used on a Build that was

triggered by a user request, or a Periodic scheduler. It is also

possible to configure the VC Source Step to always check out the

latest sources rather than paying attention to the Changes in the

SourceStamp, which will result in same behavior as this.

(branch=BRANCH, revision=None, changes=None, patch=None)(revision=REV, changes=None, patch=(LEVEL, DIFF, SUBDIR_ROOT))patch -pLEVEL <DIFF) from inside the relative

directory SUBDIR_ROOT. Item SUBDIR_ROOT is optional and defaults to the

builder working directory. The try feature uses this kind of

SourceStamp. If patch is None, the patching step is

bypassed.

The buildmaster is responsible for turning the BuildSet into a

set of BuildRequest objects and queueing them on the

appropriate Builders.

A BuildRequest is a request to build a specific set of sources

on a single specific Builder. Each Builder runs the

BuildRequest as soon as it can (i.e. when an associated

buildslave becomes free). BuildRequests are prioritized from

oldest to newest, so when a buildslave becomes free, the

Builder with the oldest BuildRequest is run.

The BuildRequest contains the SourceStamp specification.

The actual process of running the build (the series of Steps that will

be executed) is implemented by the Build object. In this future

this might be changed, to have the Build define what

gets built, and a separate BuildProcess (provided by the

Builder) to define how it gets built.

The BuildRequest may be mergeable with other compatible

BuildRequests. Builds that are triggered by incoming Changes

will generally be mergeable. Builds that are triggered by user

requests are generally not, unless they are multiple requests to build

the latest sources of the same branch.

The Buildmaster runs a collection of Builders, each of which handles a single type of build (e.g. full versus quick), on one or more build slaves. Builders serve as a kind of queue for a particular type of build. Each Builder gets a separate column in the waterfall display. In general, each Builder runs independently (although various kinds of interlocks can cause one Builder to have an effect on another).

Each builder is a long-lived object which controls a sequence of Builds. Each Builder is created when the config file is first parsed, and lives forever (or rather until it is removed from the config file). It mediates the connections to the buildslaves that do all the work, and is responsible for creating the Build objects - see Build.

Each builder gets a unique name, and the path name of a directory where it gets to do all its work (there is a buildmaster-side directory for keeping status information, as well as a buildslave-side directory where the actual checkout/compile/test commands are executed).

A builder also has a BuildFactory, which is responsible for creating new Build instances: because the Build instance is what actually performs each build, choosing the BuildFactory is the way to specify what happens each time a build is done (see Build).

Each builder is associated with one of more BuildSlaves. A builder which is used to perform Mac OS X builds (as opposed to Linux or Solaris builds) should naturally be associated with a Mac buildslave.

If multiple buildslaves are available for any given builder, you will have some measure of redundancy: in case one slave goes offline, the others can still keep the Builder working. In addition, multiple buildslaves will allow multiple simultaneous builds for the same Builder, which might be useful if you have a lot of forced or “try” builds taking place.

If you use this feature, it is important to make sure that the buildslaves are all, in fact, capable of running the given build. The slave hosts should be configured similarly, otherwise you will spend a lot of time trying (unsuccessfully) to reproduce a failure that only occurs on some of the buildslaves and not the others. Different platforms, operating systems, versions of major programs or libraries, all these things mean you should use separate Builders.

A build is a single compile or test run of a particular version of the source code, and is comprised of a series of steps. It is ultimately up to you what constitutes a build, but for compiled software it is generally the checkout, configure, make, and make check sequence. For interpreted projects like Python modules, a build is generally a checkout followed by an invocation of the bundled test suite.

The builder which creates a build specifies its steps via a BuildFactory; the build then executes the steps and records their results.

Buildbot has a somewhat limited awareness of users. It assumes the world consists of a set of developers, each of whom can be described by a couple of simple attributes. These developers make changes to the source code, causing builds which may succeed or fail.

Each developer is primarily known through the source control system. Each

Change object that arrives is tagged with a who field that

typically gives the account name (on the repository machine) of the user

responsible for that change. This string is the primary key by which the

User is known, and is displayed on the HTML status pages and in each Build's

“blamelist”.

To do more with the User than just refer to them, this username needs to be mapped into an address of some sort. The responsibility for this mapping is left up to the status module which needs the address. The core code knows nothing about email addresses or IRC nicknames, just user names.

Each change has a single user who is responsible for it. Most builds have a set of changes: the build generally represents the first time these changes have been built and tested by the Buildbot. The build has a “blamelist” that is the union of the users responsible for all the build's changes.

The build provides a list of users who are interested in the build – the “interested users”. Usually this is equal to the blamelist, but may also be expanded, e.g., to include the current build sherrif or a module's maintainer.

If desired, the buildbot can notify the interested users until the problem is resolved.

The buildbot.status.mail.MailNotifier class

(see MailNotifier) provides a status target which can send email

about the results of each build. It accepts a static list of email

addresses to which each message should be delivered, but it can also

be configured to send mail to the Build's Interested Users. To do

this, it needs a way to convert User names into email addresses.

For many VC systems, the User Name is actually an account name on the system which hosts the repository. As such, turning the name into an email address is a simple matter of appending “@repositoryhost.com”. Some projects use other kinds of mappings (for example the preferred email address may be at “project.org” despite the repository host being named “cvs.project.org”), and some VC systems have full separation between the concept of a user and that of an account on the repository host (like Perforce). Some systems (like Git) put a full contact email address in every change.

To convert these names to addresses, the MailNotifier uses an EmailLookup

object. This provides a .getAddress method which accepts a name and

(eventually) returns an address. The default MailNotifier

module provides an EmailLookup which simply appends a static string,

configurable when the notifier is created. To create more complex behaviors

(perhaps using an LDAP lookup, or using “finger” on a central host to

determine a preferred address for the developer), provide a different object

as the lookup argument.

In the future, when the Problem mechanism has been set up, the Buildbot will need to send mail to arbitrary Users. It will do this by locating a MailNotifier-like object among all the buildmaster's status targets, and asking it to send messages to various Users. This means the User-to-address mapping only has to be set up once, in your MailNotifier, and every email message the buildbot emits will take advantage of it.

Like MailNotifier, the buildbot.status.words.IRC class

provides a status target which can announce the results of each build. It

also provides an interactive interface by responding to online queries

posted in the channel or sent as private messages.

In the future, the buildbot can be configured map User names to IRC

nicknames, to watch for the recent presence of these nicknames, and to

deliver build status messages to the interested parties. Like

MailNotifier does for email addresses, the IRC object

will have an IRCLookup which is responsible for nicknames. The

mapping can be set up statically, or it can be updated by online users

themselves (by claiming a username with some kind of “buildbot: i am

user warner” commands).

Once the mapping is established, the rest of the buildbot can ask the

IRC object to send messages to various users. It can report on

the likelihood that the user saw the given message (based upon how long the

user has been inactive on the channel), which might prompt the Problem

Hassler logic to send them an email message instead.

The Buildbot also offers a desktop status client interface which can display real-time build status in a GUI panel on the developer's desktop.

Each build has a set of “Build Properties”, which can be used by its BuildSteps to modify their actions. These properties, in the form of key-value pairs, provide a general framework for dynamically altering the behavior of a build based on its circumstances.

Properties come from a number of places:

Properties are very flexible, and can be used to implement all manner of functionality. Here are some examples:

Most Source steps record the revision that they checked out in

the got_revision property. A later step could use this

property to specify the name of a fully-built tarball, dropped in an

easily-acessible directory for later testing.

Some projects want to perform nightly builds as well as bulding in response to

committed changes. Such a project would run two schedulers, both pointing to

the same set of builders, but could provide an is_nightly property so

that steps can distinguish the nightly builds, perhaps to run more

resource-intensive tests.

Some projects have different build processes on different systems. Rather than create a build factory for each slave, the steps can use buildslave properties to identify the unique aspects of each slave and adapt the build process dynamically.

The buildbot's behavior is defined by the “config file”, which

normally lives in the master.cfg file in the buildmaster's base

directory (but this can be changed with an option to the

buildbot create-master command). This file completely specifies

which Builders are to be run, which slaves they should use, how

Changes should be tracked, and where the status information is to be

sent. The buildmaster's buildbot.tac file names the base

directory; everything else comes from the config file.

A sample config file was installed for you when you created the buildmaster, but you will need to edit it before your buildbot will do anything useful.

This chapter gives an overview of the format of this file and the various sections in it. You will need to read the later chapters to understand how to fill in each section properly.

The config file is, fundamentally, just a piece of Python code which

defines a dictionary named BuildmasterConfig, with a number of

keys that are treated specially. You don't need to know Python to do

basic configuration, though, you can just copy the syntax of the

sample file. If you are comfortable writing Python code,

however, you can use all the power of a full programming language to

achieve more complicated configurations.

The BuildmasterConfig name is the only one which matters: all

other names defined during the execution of the file are discarded.

When parsing the config file, the Buildmaster generally compares the

old configuration with the new one and performs the minimum set of

actions necessary to bring the buildbot up to date: Builders which are

not changed are left untouched, and Builders which are modified get to

keep their old event history.

The beginning of the master.cfg file typically starts with something like:

BuildmasterConfig = c = {}

Therefore a config key of change_source will usually appear in

master.cfg as c['change_source'].

See Configuration Index for a full list of BuildMasterConfig

keys.

Python comments start with a hash character (“#”), tuples are defined with

(parenthesis, pairs), and lists (arrays) are defined with [square,

brackets]. Tuples and lists are mostly interchangeable. Dictionaries (data

structures which map “keys” to “values”) are defined with curly braces:

{'key1': 'value1', 'key2': 'value2'} . Function calls (and object

instantiation) can use named parameters, like w =

html.Waterfall(http_port=8010).

The config file starts with a series of import statements, which make

various kinds of Steps and Status targets available for later use. The main

BuildmasterConfig dictionary is created, then it is populated with a

variety of keys, described section-by-section in subsequent chapters.

The following symbols are automatically available for use in the configuration file.

basediros.path.expanduser(os.path.join(basedir, 'master.cfg'))

The config file is only read at specific points in time. It is first read when the buildmaster is launched. Once it is running, there are various ways to ask it to reload the config file.

If you are on the system hosting the buildmaster, you can send a SIGHUP

signal to it: the buildbot tool has a shortcut for this:

buildbot reconfig BASEDIR

This command will show you all of the lines from twistd.log that relate to the reconfiguration. If there are any problems during the config-file reload, they will be displayed in these lines.

Buildbot's reconfiguration system is fragile for a few difficult-to-fix reasons:

The debug tool (buildbot debugclient --master HOST:PORT) has a

“Reload .cfg” button which will also trigger a reload. In the

future, there will be other ways to accomplish this step (probably a

password-protected button on the web page, as well as a privileged IRC

command).

When reloading the config file, the buildmaster will endeavor to change as little as possible about the running system. For example, although old status targets may be shut down and new ones started up, any status targets that were not changed since the last time the config file was read will be left running and untouched. Likewise any Builders which have not been changed will be left running. If a Builder is modified (say, the build process is changed) while a Build is currently running, that Build will keep running with the old process until it completes. Any previously queued Builds (or Builds which get queued after the reconfig) will use the new process.

To verify that the config file is well-formed and contains no deprecated or invalid elements, use the “checkconfig” command, passing it either a master directory or a config file.

% buildbot checkconfig master.cfg

Config file is good!

# or

% buildbot checkconfig /tmp/masterdir

Config file is good!

If the config file has deprecated features (perhaps because you've upgraded the buildmaster and need to update the config file to match), they will be announced by checkconfig. In this case, the config file will work, but you should really remove the deprecated items and use the recommended replacements instead:

% buildbot checkconfig master.cfg

/usr/lib/python2.4/site-packages/buildbot/master.py:559: DeprecationWarning: c['sources'] is

deprecated as of 0.7.6 and will be removed by 0.8.0 . Please use c['change_source'] instead.

warnings.warn(m, DeprecationWarning)

Config file is good!

If the config file is simply broken, that will be caught too:

% buildbot checkconfig master.cfg

Traceback (most recent call last):

File "/usr/lib/python2.4/site-packages/buildbot/scripts/runner.py", line 834, in doCheckConfig

ConfigLoader(configFile)

File "/usr/lib/python2.4/site-packages/buildbot/scripts/checkconfig.py", line 31, in __init__

self.loadConfig(configFile)

File "/usr/lib/python2.4/site-packages/buildbot/master.py", line 480, in loadConfig

exec f in localDict

File "/home/warner/BuildBot/master/foolscap/master.cfg", line 90, in ?

c[bogus] = "stuff"

NameError: name 'bogus' is not defined

The keys in this section affect the operations of the buildmaster globally.

Buildbot requires a connection to a database to maintain certain state

information, such as tracking pending build requests. By default this is

stored in a sqlite file called 'state.sqlite' in the base directory of your

master. This can be overridden with the db_url parameter.

This parameter is of the form:

driver://[username:password@]host:port/database[?args]

For sqlite databases, since there is no host and port, relative paths are

specified with sqlite:/// and absolute paths with sqlite:////

c['db_url'] = "sqlite:///state.sqlite"

c['db_url'] = "mysql://user:pass@somehost.com/database_name?max_idle=300"

The max_idle argument for MySQL connections should be set to something

less than the wait_timeout configured for your server. This ensures that

connections are closed and re-opened after the configured amount of idle time.

If you see errors such as _mysql_exceptions.OperationalError: (2006,

'MySQL server has gone away'), this means your max_idle setting is

probably too high. show global variables like 'wait_timeout'; will show

what the currently configured wait_timeout is on your MySQL server.

Normally buildbot operates using a single master process that uses the configured database to save state.

It is possible to configure buildbot to have multiple master processes that share state in the same database. This has been well tested using a MySQL database. There are several benefits of Multi-master mode:

State that is shared in the database includes:

Because of this shared state, you are strongly encouraged to:

One suggested configuration is to have one buildbot master configured with just the scheduler and change sources; and then other masters configured with just the builders.

To enable multi-master mode in this configuration, you will need to set the

multiMaster option so that buildbot doesn't warn about missing

schedulers or builders. You will also need to set db_poll_interval

to the masters with only builders check the database for new build requests

at the configured interval.

# Enable multiMaster mode; disables warnings about unknown builders and

# schedulers

c['multiMaster'] = True

# Check for new build requests every 60 seconds

c['db_poll_interval'] = 60

There are a couple of basic settings that you use to tell the buildbot what it is working on. This information is used by status reporters to let users find out more about the codebase being exercised by this particular Buildbot installation.

Note that these parameters were added long before Buildbot became able to build multiple projects in a single buildmaster, and thus assume that there is only one project. While the configuration parameter names may be confusing, a suitable choice of name and URL should help users avoid any confusion.

c['projectName'] = "Buildbot"

c['projectURL'] = "http://buildbot.sourceforge.net/"

c['buildbotURL'] = "http://localhost:8010/"

projectName is a short string that will be used to describe the

project that this buildbot is working on. For example, it is used as

the title of the waterfall HTML page.

projectURL is a string that gives a URL for the project as a

whole. HTML status displays will show projectName as a link to

projectURL, to provide a link from buildbot HTML pages to your

project's home page.

The buildbotURL string should point to the location where the buildbot's

internal web server is visible. This typically uses the port number set when

you create the Waterfall object: the buildbot needs your help to figure

out a suitable externally-visible host name.

When status notices are sent to users (either by email or over IRC),

buildbotURL will be used to create a URL to the specific build

or problem that they are being notified about. It will also be made

available to queriers (over IRC) who want to find out where to get

more information about this buildbot.

c['logCompressionLimit'] = 16384

c['logCompressionMethod'] = 'gz'

c['logMaxSize'] = 1024*1024 # 1M

c['logMaxTailSize'] = 32768

The logCompressionLimit enables compression of build logs on

disk for logs that are bigger than the given size, or disables that

completely if set to False. The default value is 4k, which should

be a reasonable default on most file systems. This setting has no impact

on status plugins, and merely affects the required disk space on the

master for build logs.

The logCompressionMethod controls what type of compression is used for

build logs. The default is 'bz2', the other valid option is 'gz'. 'bz2'

offers better compression at the expense of more CPU time.

The logMaxSize parameter sets an upper limit (in bytes) to how large

logs from an individual build step can be. The default value is None, meaning

no upper limit to the log size. Any output exceeding logMaxSize will be

truncated, and a message to this effect will be added to the log's HEADER

channel.

If logMaxSize is set, and the output from a step exceeds the maximum,

the logMaxTailSize parameter controls how much of the end of the build

log will be kept. The effect of setting this parameter is that the log will

contain the first logMaxSize bytes and the last logMaxTailSize

bytes of output. Don't set this value too high, as the the tail of the log is

kept in memory.

c['changeHorizon'] = 200

c['buildHorizon'] = 100

c['eventHorizon'] = 50

c['logHorizon'] = 40

c['buildCacheSize'] = 15

c['changeCacheSize'] = 10000

Buildbot stores historical information on disk in the form of "Pickle" files and compressed logfiles. In a large installation, these can quickly consume disk space, yet in many cases developers never consult this historical information.